1. Introduction

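ResNet (deep residual network) introduces "shortcut connections" to ease the training of very deep neural networks, where problems such as vanishing gradients make optimization difficult. A shortcut lets the input of a block skip the intermediate layers and be added directly to the output of a later layer, so the network learns the residual (the difference) between input and output rather than the full, complex mapping. With this design a block can fall back to an identity mapping whenever that is the best choice, simply by driving its residual branch toward zero, which simplifies training and eases the difficulties of deep networks. As a result, ResNet can effectively train very deep architectures and delivers significant gains on several visual recognition tasks.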
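To make the identity-mapping point concrete, here is a minimal pure-Python sketch. It is not the ResNet.py code from this post: the names `plain_layer` and `residual_layer` are hypothetical, and the "layer" is a toy element-wise `relu(w * x + b)` chosen only for illustration. With zero weights the plain layer wipes out its input, while the residual version passes it through unchanged.

```python
def relu(v):
    """Rectified linear unit for a single float."""
    return max(0.0, v)


def plain_layer(x, w, b):
    """Toy layer that must learn the full mapping: relu(w * x + b), element-wise."""
    return [relu(w * xi + b) for xi in x]


def residual_layer(x, w, b):
    """Same toy layer wrapped with a shortcut: output = F(x) + x."""
    fx = plain_layer(x, w, b)
    return [fi + xi for fi, xi in zip(fx, x)]


x = [1.0, -2.0, 3.5]
plain_out = plain_layer(x, w=0.0, b=0.0)        # residual branch switched off
residual_out = residual_layer(x, w=0.0, b=0.0)  # shortcut still carries x

print(plain_out)
print(residual_out)
```

In a real residual block the branch consists of convolutions and normalization, but the principle is the same: the shortcut gives the block an identity mapping without any learning.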

For a detailed description of ResNet, see the paper: https://arxiv.org/pdf/1512.03385.pdf

This post explains how to integrate ResNet into YOLOv8.

Without further ado, on to the code!

2. Integrating ResNet into YOLOv8

2.1 Step 1

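First locate the directory 'ultralytics/nn' and create a folder named 'Addmodules' inside it. In that folder, create a file named ResNet.py (you can choose a different file name if you prefer), then copy the core ResNet code below into it.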
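If you prefer to create the folder and file from a script, the step can be sketched with `pathlib`. The helper name `scaffold_addmodules` is hypothetical, and the demo runs inside a temporary directory as a stand-in for your ultralytics checkout so that nothing in a real repository is touched.

```python
import tempfile
from pathlib import Path


def scaffold_addmodules(repo_root):
    """Create ultralytics/nn/Addmodules/ResNet.py under repo_root and return its path."""
    module_dir = Path(repo_root) / "ultralytics" / "nn" / "Addmodules"
    module_dir.mkdir(parents=True, exist_ok=True)  # no error if it already exists
    resnet_file = module_dir / "ResNet.py"
    resnet_file.touch(exist_ok=True)               # empty file, ready for the ResNet code
    return resnet_file


# Demo in a throwaway directory instead of a real checkout.
with tempfile.TemporaryDirectory() as tmp:
    created = scaffold_addmodules(tmp)
    rel = created.relative_to(tmp).as_posix()
    existed = created.exists()

print(rel)
```

In practice you would call `scaffold_addmodules` with the root of your own checkout and then paste the ResNet code into the created file.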

    from collections import OrderedDict

    import torch.nn as nn
    import torch.nn.functional as F


    class ConvNormLayer(nn.Module):
        """Conv2d + BatchNorm2d, followed by an optional activation named in `act`."""

        def __init__(self, ch_in, ch_out, filter_size, stride, groups=1, act=None):
            super(ConvNormLayer, self).__init__()
            self.act = act
            self.conv = nn.Conv2d(
                in_channels=ch_in,
                out_channels=ch_out,
                kernel_size=filter_size,
                stride=stride,
                padding=(filter_size - 1) // 2,  # keeps the spatial size when stride is 1
                groups=groups)
            self.norm = nn.BatchNorm2d(ch_out)

        def forward(self, inputs):
            out = self.conv(inputs)
            out = self.norm(out)
            if self.act:
                # Look up the activation by name in torch.nn.functional, e.g. 'relu'
                out = getattr(F, self.act)(out)
            return out


    class SELayer(nn.Module):
        """Squeeze-and-Excitation channel attention."""

        def __init__(self, ch, reduction_ratio=16):
            super(SELayer, self).__init__()
            self.avg_pool = nn.AdaptiveAvgPool2d(1)
            self.fc = nn.Sequential(
                nn.Linear(ch, ch // reduction_ratio, bias=False),
                nn.ReLU(inplace=True),
                nn.Linear(ch // reduction_ratio, ch, bias=False),
                nn.Sigmoid()
            )

        def forward(self, x):
            b, c, _, _ = x.size()
            y = self.avg_pool(x).view(b, c)
            y = self.fc(y).view(b, c, 1, 1)
            return x * y.expand_as(x)


    class BasicBlock(nn.Module):
        """Two 3x3 convolutions plus a shortcut (used by ResNet18/34)."""

        expansion = 1

        def __init__(self, ch_in, ch_out, stride, shortcut, act='relu', variant='b', att=False):
            super(BasicBlock, self).__init__()
            self.shortcut = shortcut
            if not shortcut:
                if variant == 'd' and stride == 2:
                    self.short = nn.Sequential()
                    # nn.Sequential provides add_module (add_sublayer is the PaddlePaddle API)
                    self.short.add_module(
                        'pool',
                        nn.AvgPool2d(kernel_size=2, stride=2, padding=0, ceil_mode=True))
                    self.short.add_module(
                        'conv',
                        ConvNormLayer(ch_in=ch_in, ch_out=ch_out, filter_size=1, stride=1))
                else:
                    self.short = ConvNormLayer(
                        ch_in=ch_in, ch_out=ch_out, filter_size=1, stride=stride)

            self.branch2a = ConvNormLayer(
                ch_in=ch_in, ch_out=ch_out, filter_size=3, stride=stride, act='relu')
            self.branch2b = ConvNormLayer(
                ch_in=ch_out, ch_out=ch_out, filter_size=3, stride=1, act=None)

            self.att = att
            if self.att:
                self.se = SELayer(ch_out)

        def forward(self, inputs):
            out = self.branch2a(inputs)
            out = self.branch2b(out)
            if self.att:
                out = self.se(out)
            # Identity shortcut, or a projection when the shape changes
            if self.shortcut:
                short = inputs
            else:
                short = self.short(inputs)
            out = out + short
            out = F.relu(out)
            return out


    class BottleNeck(nn.Module):
        """1x1 -> 3x3 -> 1x1 convolutions plus a shortcut; outputs ch_out * 4 channels."""

        expansion = 4

        def __init__(self, ch_in, ch_out, stride, shortcut, act='relu', variant='d', att=False):
            super().__init__()
            # Variant 'a' strides in the first 1x1 conv, the others in the 3x3 conv
            if variant == 'a':
                stride1, stride2 = stride, 1
            else:
                stride1, stride2 = 1, stride

            width = ch_out
            self.branch2a = ConvNormLayer(ch_in, width, 1, stride1, act=act)
            self.branch2b = ConvNormLayer(width, width, 3, stride2, act=act)
            self.branch2c = ConvNormLayer(width, ch_out * self.expansion, 1, 1)

            self.shortcut = shortcut
            if not shortcut:
                if variant == 'd' and stride == 2:
                    self.short = nn.Sequential(OrderedDict([
                        ('pool', nn.AvgPool2d(2, 2, 0, ceil_mode=True)),
                        ('conv', ConvNormLayer(ch_in, ch_out * self.expansion, 1, 1))
                    ]))
                else:
                    self.short = ConvNormLayer(ch_in, ch_out * self.expansion, 1, stride)

            self.att = att
            if self.att:
                # The SE layer sees the expanded output of branch2c, so size it accordingly
                self.se = SELayer(ch_out * self.expansion)

        def forward(self, x):
            out = self.branch2a(x)
            out = self.branch2b(out)
            out = self.branch2c(out)
            if self.att:
                out = self.se(out)
            if self.shortcut:
                short = x
            else:
                short = self.short(x)
            out = out + short
            out = F.relu(out)
            return out


    class Blocks(nn.Module):
        """One ResNet stage: `count` blocks of the class named by `block`."""

        def __init__(self, ch_in, ch_out, count, block, stage_num, att=False, variant='b'):
            super(Blocks, self).__init__()
            self.blocks = nn.ModuleList()
            # `block` is a class name string, e.g. 'BasicBlock' or 'BottleNeck'
            block = globals()[block]
            for i in range(count):
                self.blocks.append(
                    block(
                        ch_in,
                        ch_out,
                        # Downsample in the first block of every stage except stage 2
                        stride=2 if i == 0 and stage_num != 2 else 1,
                        # The first block needs a projection shortcut
                        shortcut=False if i == 0 else True,
                        variant=variant,
                        att=att)
                )
                if i == 0:
                    ch_in = ch_out * block.expansion

        def forward(self, inputs):
            block_out = inputs
            for block in self.blocks:
                block_out = block(block_out)
            return block_out

2.2 Step 2

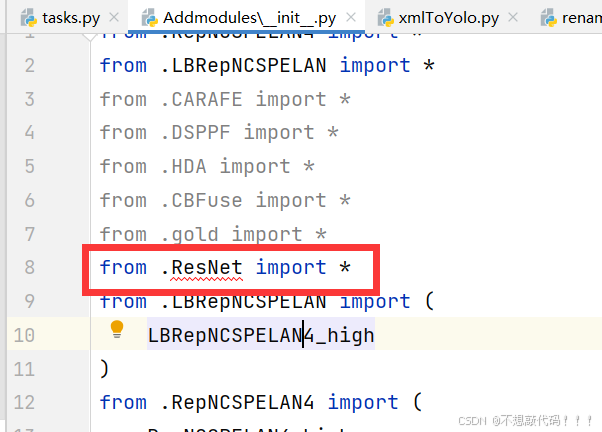

In Addmodules, create a new Python file named '__init__.py' and add the code shown in the screenshot.
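The exact contents of that file are only visible in the screenshot. A common pattern for such a package file, assumed here rather than taken from the post, is a wildcard re-export: `from .ResNet import *`. The sketch below builds a throwaway package in a temporary directory, with a dummy one-class ResNet.py as a stand-in, to show that this line makes the classes importable from the package itself.

```python
import importlib
import sys
import tempfile
from pathlib import Path

with tempfile.TemporaryDirectory() as tmp:
    pkg = Path(tmp) / "Addmodules"
    pkg.mkdir()
    # Stand-in for the real ResNet.py: one dummy class is enough for the demo.
    (pkg / "ResNet.py").write_text("class Blocks:\n    pass\n")
    # Assumed content of __init__.py: re-export every public name from ResNet.py.
    (pkg / "__init__.py").write_text("from .ResNet import *\n")

    sys.path.insert(0, tmp)
    try:
        addmodules = importlib.import_module("Addmodules")
        exported = hasattr(addmodules, "Blocks")
    finally:
        sys.path.remove(tmp)

print(exported)
```

With an `__init__.py` like this, the import in the next step could be a single line such as `from .Addmodules import *` inside `ultralytics/nn/tasks.py`. That line is likewise an assumption about what the screenshot shows.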

2.3 Step 3

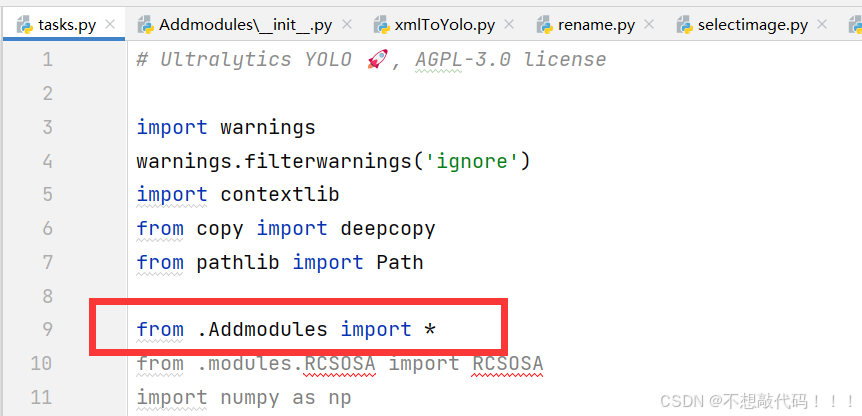

Import the new module in tasks.py (ultralytics/nn/tasks.py).

2.4 Step 4

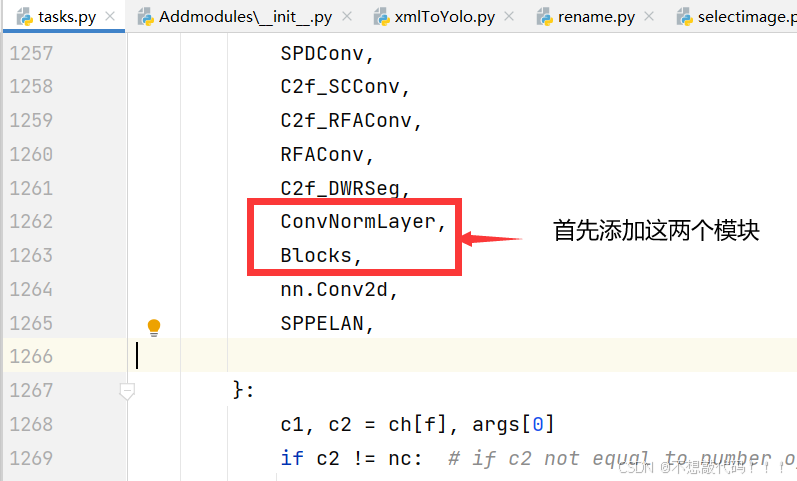

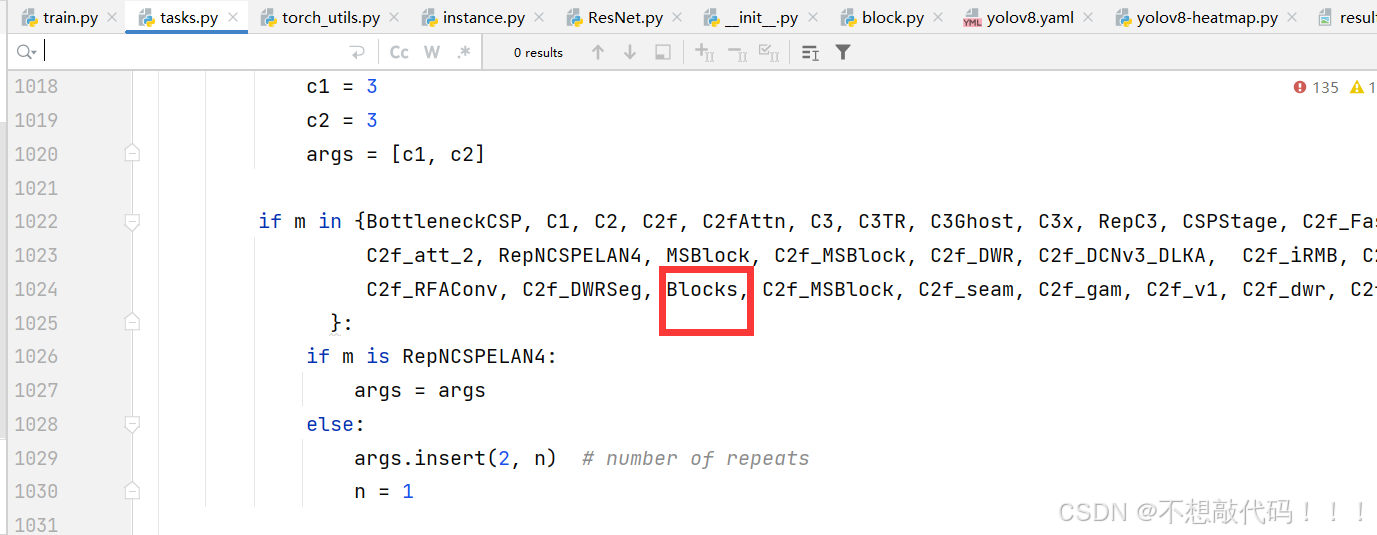

Register the module in tasks.py by adding code to the parse_model function.

Note: changes are needed in three places in total.

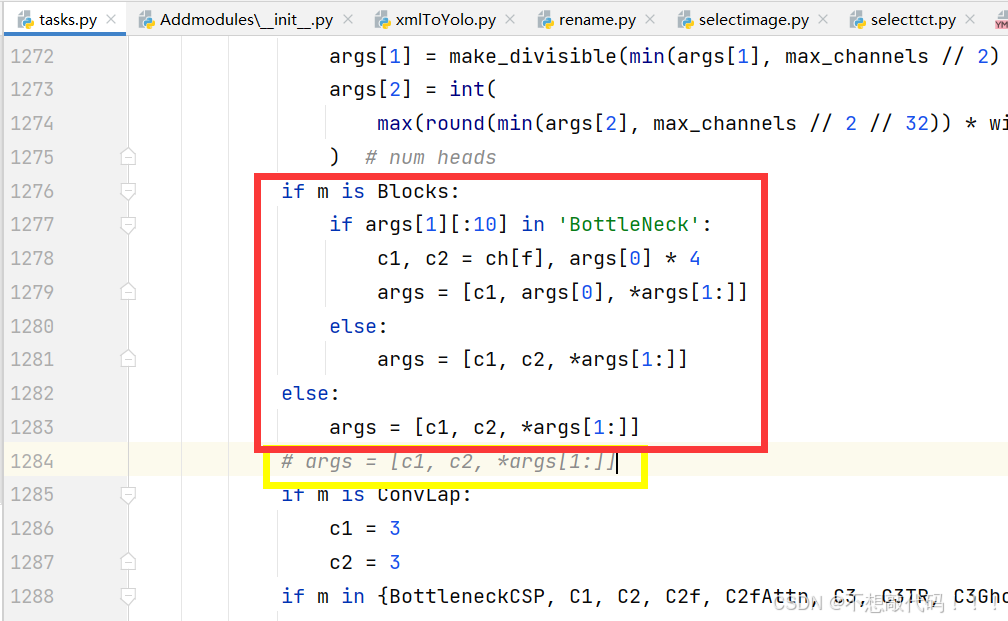

Then, as shown in the figure, comment out the code in the yellow box and add the code in the red box.
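The registration code itself is only shown in the screenshots. As a rough, hypothetical sketch of what such a branch has to achieve, the function below maps one `Blocks` row of the YAML, for example `[-1, 2, Blocks, [64, BasicBlock, 2, False]]`, onto the constructor signature `Blocks(ch_in, ch_out, count, block, stage_num, att)`. The names `resolve_blocks_args` and `EXPANSION` are illustrative only and are not part of ultralytics or of the author's code.

```python
# Expansion factors as defined on the block classes in ResNet.py.
EXPANSION = {"BasicBlock": 1, "BottleNeck": 4}


def resolve_blocks_args(c1, repeats, yaml_args):
    """Turn one `Blocks` YAML row into constructor arguments and output channels.

    c1        -- channels produced by the previous layer
    repeats   -- the row's `repeats` field (number of residual blocks in the stage)
    yaml_args -- the row's args list, e.g. [64, 'BasicBlock', 2, False]
    """
    ch_out, block_name, stage_num, att = yaml_args
    # Order follows Blocks.__init__(ch_in, ch_out, count, block, stage_num, att)
    ctor_args = [c1, ch_out, repeats, block_name, stage_num, att]
    # The next layer sees ch_out multiplied by the block's expansion factor
    c2 = ch_out * EXPANSION[block_name]
    return ctor_args, c2


ctor_args, c2 = resolve_blocks_args(c1=64, repeats=2, yaml_args=[64, "BasicBlock", 2, False])
print(ctor_args, c2)
```

Because `Blocks` builds all of its residual blocks internally from `count`, a parser branch along these lines would pass the repeat count as an argument instead of instantiating the module several times.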

Registration is now complete. Copy one of the YAML files below and run it directly.
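Before picking a configuration, it can help to see how the backbone rows map to the P3/P4/P5 feature levels that the head concatenates. The sketch below traces output channels and cumulative stride through backbone layers 0-7, using the stride rule from `Blocks` (the first block of a stage downsamples unless `stage_num` is 2). The helper `trace_backbone` is hypothetical, and it assumes the channel counts are used exactly as written, with no width scaling applied.

```python
def trace_backbone(expansion=1):
    """Return (index, module, out_channels, cumulative_stride) for backbone layers 0-7.

    expansion -- 1 for BasicBlock, 4 for BottleNeck (the `expansion` class attributes).
    """
    # (module, out_channels, stride) for the stem and max-pool rows of the YAML.
    rows = [
        ("ConvNormLayer", 32, 2),
        ("ConvNormLayer", 32, 1),
        ("ConvNormLayer", 64, 1),
        ("nn.MaxPool2d", 64, 2),
    ]
    for stage_num, ch_out in zip((2, 3, 4, 5), (64, 128, 256, 512)):
        # Blocks gives its first block stride 2 unless stage_num == 2.
        stride = 1 if stage_num == 2 else 2
        rows.append(("Blocks", ch_out * expansion, stride))

    trace, total_stride = [], 1
    for index, (module, channels, stride) in enumerate(rows):
        total_stride *= stride
        trace.append((index, module, channels, total_stride))
    return trace


for row in trace_backbone():
    print(row)
```

Layers 5, 6 and 7 end up at strides 8, 16 and 32, which matches the P3, P4 and P5 comments in the YAML and explains why the head concatenates layers 5 and 6. Note that the four configurations in this post all name BasicBlock; passing `expansion=4` shows the channel widths that BottleNeck, the block type used by the standard ResNet50 and ResNet101, would give instead.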

YAML file 1 - ResNet18

    # Ultralytics YOLO 🚀, AGPL-3.0 license
    # YOLOv8 object detection model with P3-P5 outputs. For Usage examples see https://docs.ultralytics.com/tasks/detect

    # Parameters
    nc: 80 # number of classes
    scales: # model compound scaling constants, i.e. 'model=yolov8n.yaml' will call yolov8.yaml with scale 'n'
      # [depth, width, max_channels]
      n: [0.33, 0.25, 1024] # YOLOv8n summary: 225 layers, 3157200 parameters, 3157184 gradients, 8.9 GFLOPs
      s: [0.33, 0.50, 1024] # YOLOv8s summary: 225 layers, 11166560 parameters, 11166544 gradients, 28.8 GFLOPs
      m: [0.67, 0.75, 768] # YOLOv8m summary: 295 layers, 25902640 parameters, 25902624 gradients, 79.3 GFLOPs
      l: [1.00, 1.00, 512] # YOLOv8l summary: 365 layers, 43691520 parameters, 43691504 gradients, 165.7 GFLOPs
      x: [1.00, 1.25, 512] # YOLOv8x summary: 365 layers, 68229648 parameters, 68229632 gradients, 258.5 GFLOPs

    # YOLOv8.0n backbone
    backbone:
      # [from, repeats, module, args]
      - [-1, 1, ConvNormLayer, [32, 3, 2, 1, 'relu']] # 0-P1
      - [-1, 1, ConvNormLayer, [32, 3, 1, 1, 'relu']] # 1
      - [-1, 1, ConvNormLayer, [64, 3, 1, 1, 'relu']] # 2
      - [-1, 1, nn.MaxPool2d, [3, 2, 1]] # 3-P2
      - [-1, 2, Blocks, [64, BasicBlock, 2, False]] # 4
      - [-1, 2, Blocks, [128, BasicBlock, 3, False]] # 5-P3
      - [-1, 2, Blocks, [256, BasicBlock, 4, False]] # 6-P4
      - [-1, 2, Blocks, [512, BasicBlock, 5, False]] # 7-P5
      - [-1, 1, SPPF, [1024, 5]] # 8

    # YOLOv8.0n head
    head:
      - [-1, 1, nn.Upsample, [None, 2, 'nearest']]
      - [[-1, 6], 1, Concat, [1]] # cat backbone P4
      - [-1, 3, C2f, [512]] # 11

      - [-1, 1, nn.Upsample, [None, 2, 'nearest']]
      - [[-1, 5], 1, Concat, [1]] # cat backbone P3
      - [-1, 3, C2f, [256]] # 14 (P3/8-small)

      - [-1, 1, Conv, [256, 3, 2]]
      - [[-1, 11], 1, Concat, [1]] # cat head P4
      - [-1, 3, C2f, [512]] # 17 (P4/16-medium)

      - [-1, 1, Conv, [512, 3, 2]]
      - [[-1, 8], 1, Concat, [1]] # cat head P5
      - [-1, 3, C2f, [1024]] # 20 (P5/32-large)

      - [[14, 17, 20], 1, Detect, [nc]] # Detect(P3, P4, P5)

YAML file 2 - ResNet34

    # Ultralytics YOLO 🚀, AGPL-3.0 license
    # YOLOv8 object detection model with P3-P5 outputs. For Usage examples see https://docs.ultralytics.com/tasks/detect

    # Parameters
    nc: 80 # number of classes
    scales: # model compound scaling constants, i.e. 'model=yolov8n.yaml' will call yolov8.yaml with scale 'n'
      # [depth, width, max_channels]
      n: [0.33, 0.25, 1024] # YOLOv8n summary: 225 layers, 3157200 parameters, 3157184 gradients, 8.9 GFLOPs
      s: [0.33, 0.50, 1024] # YOLOv8s summary: 225 layers, 11166560 parameters, 11166544 gradients, 28.8 GFLOPs
      m: [0.67, 0.75, 768] # YOLOv8m summary: 295 layers, 25902640 parameters, 25902624 gradients, 79.3 GFLOPs
      l: [1.00, 1.00, 512] # YOLOv8l summary: 365 layers, 43691520 parameters, 43691504 gradients, 165.7 GFLOPs
      x: [1.00, 1.25, 512] # YOLOv8x summary: 365 layers, 68229648 parameters, 68229632 gradients, 258.5 GFLOPs

    # YOLOv8.0n backbone
    backbone:
      # [from, repeats, module, args]
      - [-1, 1, ConvNormLayer, [32, 3, 2, 1, 'relu']] # 0-P1
      - [-1, 1, ConvNormLayer, [32, 3, 1, 1, 'relu']] # 1
      - [-1, 1, ConvNormLayer, [64, 3, 1, 1, 'relu']] # 2
      - [-1, 1, nn.MaxPool2d, [3, 2, 1]] # 3-P2
      - [-1, 3, Blocks, [64, BasicBlock, 2, False]] # 4
      - [-1, 4, Blocks, [128, BasicBlock, 3, False]] # 5-P3
      - [-1, 6, Blocks, [256, BasicBlock, 4, False]] # 6-P4
      - [-1, 3, Blocks, [512, BasicBlock, 5, False]] # 7-P5
      - [-1, 1, SPPF, [1024, 5]] # 8

    # YOLOv8.0n head
    head:
      - [-1, 1, nn.Upsample, [None, 2, 'nearest']]
      - [[-1, 6], 1, Concat, [1]] # cat backbone P4
      - [-1, 3, C2f, [512]] # 11

      - [-1, 1, nn.Upsample, [None, 2, 'nearest']]
      - [[-1, 5], 1, Concat, [1]] # cat backbone P3
      - [-1, 3, C2f, [256]] # 14 (P3/8-small)

      - [-1, 1, Conv, [256, 3, 2]]
      - [[-1, 11], 1, Concat, [1]] # cat head P4
      - [-1, 3, C2f, [512]] # 17 (P4/16-medium)

      - [-1, 1, Conv, [512, 3, 2]]
      - [[-1, 8], 1, Concat, [1]] # cat head P5
      - [-1, 3, C2f, [1024]] # 20 (P5/32-large)

      - [[14, 17, 20], 1, Detect, [nc]] # Detect(P3, P4, P5)

YAML file 3 - ResNet50

    # Ultralytics YOLO 🚀, AGPL-3.0 license
    # YOLOv8 object detection model with P3-P5 outputs. For Usage examples see https://docs.ultralytics.com/tasks/detect

    # Parameters
    nc: 80 # number of classes
    scales: # model compound scaling constants, i.e. 'model=yolov8n.yaml' will call yolov8.yaml with scale 'n'
      # [depth, width, max_channels]
      n: [0.33, 0.25, 1024] # YOLOv8n summary: 225 layers, 3157200 parameters, 3157184 gradients, 8.9 GFLOPs
      s: [0.33, 0.50, 1024] # YOLOv8s summary: 225 layers, 11166560 parameters, 11166544 gradients, 28.8 GFLOPs
      m: [0.67, 0.75, 768] # YOLOv8m summary: 295 layers, 25902640 parameters, 25902624 gradients, 79.3 GFLOPs
      l: [1.00, 1.00, 512] # YOLOv8l summary: 365 layers, 43691520 parameters, 43691504 gradients, 165.7 GFLOPs
      x: [1.00, 1.25, 512] # YOLOv8x summary: 365 layers, 68229648 parameters, 68229632 gradients, 258.5 GFLOPs

    # YOLOv8.0n backbone
    backbone:
      # [from, repeats, module, args]
      - [-1, 1, ConvNormLayer, [32, 3, 2, 1, 'relu']] # 0-P1
      - [-1, 1, ConvNormLayer, [32, 3, 1, 1, 'relu']] # 1
      - [-1, 1, ConvNormLayer, [64, 3, 1, 1, 'relu']] # 2
      - [-1, 1, nn.MaxPool2d, [3, 2, 1]] # 3-P2
      - [-1, 3, Blocks, [64, BasicBlock, 2, False]] # 4
      - [-1, 4, Blocks, [128, BasicBlock, 3, False]] # 5-P3
      - [-1, 6, Blocks, [256, BasicBlock, 4, False]] # 6-P4
      - [-1, 3, Blocks, [512, BasicBlock, 5, False]] # 7-P5
      - [-1, 1, SPPF, [1024, 5]] # 8

    # YOLOv8.0n head
    head:
      - [-1, 1, nn.Upsample, [None, 2, 'nearest']]
      - [[-1, 6], 1, Concat, [1]] # cat backbone P4
      - [-1, 3, C2f, [512]] # 11

      - [-1, 1, nn.Upsample, [None, 2, 'nearest']]
      - [[-1, 5], 1, Concat, [1]] # cat backbone P3
      - [-1, 3, C2f, [256]] # 14 (P3/8-small)

      - [-1, 1, Conv, [256, 3, 2]]
      - [[-1, 11], 1, Concat, [1]] # cat head P4
      - [-1, 3, C2f, [512]] # 17 (P4/16-medium)

      - [-1, 1, Conv, [512, 3, 2]]
      - [[-1, 8], 1, Concat, [1]] # cat head P5
      - [-1, 3, C2f, [1024]] # 20 (P5/32-large)

      - [[14, 17, 20], 1, Detect, [nc]] # Detect(P3, P4, P5)

YAML file 4 - ResNet101

    # Ultralytics YOLO 🚀, AGPL-3.0 license
    # YOLOv8 object detection model with P3-P5 outputs. For Usage examples see https://docs.ultralytics.com/tasks/detect

    # Parameters
    nc: 80 # number of classes
    scales: # model compound scaling constants, i.e. 'model=yolov8n.yaml' will call yolov8.yaml with scale 'n'
      # [depth, width, max_channels]
      n: [0.33, 0.25, 1024] # YOLOv8n summary: 225 layers, 3157200 parameters, 3157184 gradients, 8.9 GFLOPs
      s: [0.33, 0.50, 1024] # YOLOv8s summary: 225 layers, 11166560 parameters, 11166544 gradients, 28.8 GFLOPs
      m: [0.67, 0.75, 768] # YOLOv8m summary: 295 layers, 25902640 parameters, 25902624 gradients, 79.3 GFLOPs
      l: [1.00, 1.00, 512] # YOLOv8l summary: 365 layers, 43691520 parameters, 43691504 gradients, 165.7 GFLOPs
      x: [1.00, 1.25, 512] # YOLOv8x summary: 365 layers, 68229648 parameters, 68229632 gradients, 258.5 GFLOPs

    # YOLOv8.0n backbone
    backbone:
      # [from, repeats, module, args]
      - [-1, 1, ConvNormLayer, [32, 3, 2, 1, 'relu']] # 0-P1
      - [-1, 1, ConvNormLayer, [32, 3, 1, 1, 'relu']] # 1
      - [-1, 1, ConvNormLayer, [64, 3, 1, 1, 'relu']] # 2
      - [-1, 1, nn.MaxPool2d, [3, 2, 1]] # 3-P2
      - [-1, 3, Blocks, [64, BasicBlock, 2, False]] # 4
      - [-1, 4, Blocks, [128, BasicBlock, 3, False]] # 5-P3
      - [-1, 23, Blocks, [256, BasicBlock, 4, False]] # 6-P4
      - [-1, 3, Blocks, [512, BasicBlock, 5, False]] # 7-P5
      - [-1, 1, SPPF, [1024, 5]] # 8

    # YOLOv8.0n head
    head:
      - [-1, 1, nn.Upsample, [None, 2, 'nearest']]
      - [[-1, 6], 1, Concat, [1]] # cat backbone P4
      - [-1, 3, C2f, [512]] # 11

      - [-1, 1, nn.Upsample, [None, 2, 'nearest']]
      - [[-1, 5], 1, Concat, [1]] # cat backbone P3
      - [-1, 3, C2f, [256]] # 14 (P3/8-small)

      - [-1, 1, Conv, [256, 3, 2]]
      - [[-1, 11], 1, Concat, [1]] # cat head P4
      - [-1, 3, C2f, [512]] # 17 (P4/16-medium)

      - [-1, 1, Conv, [512, 3, 2]]
      - [[-1, 8], 1, Concat, [1]] # cat head P5
      - [-1, 3, C2f, [1024]] # 20 (P5/32-large)

      - [[14, 17, 20], 1, Detect, [nc]] # Detect(P3, P4, P5)

And just like that, you have reached the end of the post. If it helped, please leave a like!