Installing Kubernetes 1.26+ with containerd (single master)

1. Machines

| IP | Hostname |
|---|---|
| 192.168.137.133 | k8smaster |
| 192.168.137.132 | k8snode1 |
| 192.168.137.134 | k8snode2 |

1.1 Machine initialization

Set the hostname on each machine to its entry in the table, then check it:

    # Use k8snode1 / k8snode2 on the worker nodes
    hostnamectl set-hostname k8smaster
    hostname

On the master, add the cluster hosts to the hosts file:

    # Addresses follow the machine table in section 1
    echo '192.168.137.133 k8smaster
    192.168.137.132 k8snode1
    192.168.137.134 k8snode2' >> /etc/hosts

On every machine, enable IPv4 forwarding and let iptables see bridged traffic:

    cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf
    overlay
    br_netfilter
    EOF

    sudo modprobe overlay
    sudo modprobe br_netfilter

    # Set the required sysctl parameters; they persist across reboots
    cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf
    net.bridge.bridge-nf-call-iptables = 1
    net.bridge.bridge-nf-call-ip6tables = 1
    net.ipv4.ip_forward = 1
    EOF

    # Apply the sysctl parameters without rebooting
    sudo sysctl --system

For better network performance you can also configure IPVS; that setup is not covered here.
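
To confirm the settings took effect, one option is to compare what the file requests with what the kernel reports. The helper below is a minimal sketch and not part of the original steps: the function names `sysctl_file_entries` and `check_sysctl_file` are made up for illustration, and it assumes a plain `key = value` file like the one written above.

```shell
# Print each "key value" pair from a sysctl-style file, skipping blank
# lines and comments and removing the whitespace around "=".
sysctl_file_entries() {
    sed -E -e '/^[[:space:]]*(#|;|$)/d' \
        -e 's/^[[:space:]]*([^=[:space:]]+)[[:space:]]*=[[:space:]]*(.*[^[:space:]])[[:space:]]*$/\1 \2/' "$1"
}

# Compare every entry in the file with the live kernel value.
# Prints one line per mismatch and returns non-zero if any is found.
check_sysctl_file() {
    local rc=0 key want have entries
    entries=$(sysctl_file_entries "$1")
    while read -r key want; do
        [ -n "$key" ] || continue
        have=$(sysctl -n "$key" 2>/dev/null) || have="<unreadable>"
        if [ "$have" != "$want" ]; then
            echo "MISMATCH $key: want $want, have $have"
            rc=1
        fi
    done <<EOF
$entries
EOF
    return "$rc"
}
```

Running `check_sysctl_file /etc/sysctl.d/k8s.conf` prints nothing and returns 0 when all three values are live. `lsmod | grep br_netfilter` shows whether the module itself is loaded.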

Set up time synchronization on every machine:

    yum install chrony -y
    systemctl start chronyd
    systemctl enable chronyd
    chronyc sources

On every machine, stop and disable the firewall if one is running:

    systemctl stop firewalld
    systemctl disable firewalld

Disable swap on every machine:

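    # Disable temporarily. Swap is turned off mainly for performance,
    # and by default kubelet refuses to start while swap is on
    swapoff -a
    # Check whether swap is off
    free
    # Disable permanently
    sed -ri 's/.*swap.*/#&/' /etc/fstab

Disable SELinux on every machine: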

    # Disable temporarily
    setenforce 0
    # Disable permanently
    sed -i 's/^SELINUX=enforcing$/SELINUX=disabled/' /etc/selinux/config

2. Install containerd on every machine

    # Add the Docker repository
    curl -L -o /etc/yum.repos.d/docker-ce.repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
    # Install containerd
    yum install -y containerd.io
    # Generate the default configuration file
    containerd config default > /etc/containerd/config.toml
    # Pull the pause image from the Aliyun mirror; without this the pull cannot connect
    sed -i "s#registry.k8s.io/pause#registry.aliyuncs.com/google_containers/pause#g" /etc/containerd/config.toml
    # Use the systemd cgroup driver
    sed -i 's/SystemdCgroup = false/SystemdCgroup = true/g' /etc/containerd/config.toml
    # Point docker.io at the Aliyun mirror
    vi /etc/containerd/config.toml

In the editor, make the registry section read:

    [plugins."io.containerd.grpc.v1.cri".registry]
      [plugins."io.containerd.grpc.v1.cri".registry.mirrors]
        [plugins."io.containerd.grpc.v1.cri".registry.mirrors."docker.io"]
          endpoint = ["https://8aj710su.mirror.aliyuncs.com", "https://registry-1.docker.io"]

Then restart the service:

    systemctl daemon-reload
    systemctl enable --now containerd
    systemctl restart containerd

3. Install kubelet, kubeadm, kubectl, and crictl on every machine
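
Before installing the Kubernetes packages, it can be worth confirming that the two `sed` edits to `/etc/containerd/config.toml` from step 2 actually landed. The check below is only a sketch: `check_containerd_config` is a hypothetical helper name, and it assumes the generated default config contains `sandbox_image` and `SystemdCgroup` keys, as the `sed` commands in step 2 expect.

```shell
# Report whether a containerd config.toml carries the two edits from step 2:
# the systemd cgroup driver and the Aliyun-hosted pause image.
# Usage: check_containerd_config /etc/containerd/config.toml
check_containerd_config() {
    local conf=$1 rc=0
    if ! grep -Eq '^[[:space:]]*SystemdCgroup[[:space:]]*=[[:space:]]*true' "$conf"; then
        echo "SystemdCgroup is not set to true"
        rc=1
    fi
    if ! grep -Eq '^[[:space:]]*sandbox_image[[:space:]]*=[[:space:]]*"registry\.aliyuncs\.com/google_containers/pause:' "$conf"; then
        echo "sandbox_image does not point at registry.aliyuncs.com/google_containers"
        rc=1
    fi
    return "$rc"
}
```

If either message appears, the corresponding `sed` pattern probably did not match the generated file, and the value can be corrected by hand before restarting containerd.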

    cat <<EOF > /etc/yum.repos.d/kubernetes.repo
    [kubernetes]
    name=Kubernetes
    baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
    enabled=1
    gpgcheck=1
    repo_gpgcheck=1
    gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
    EOF
    setenforce 0

    # Install the crictl tool
    yum install -y cri-tools
    # Generate the configuration file
    crictl config runtime-endpoint
    # Write the configuration file
    cat << EOF | tee /etc/crictl.yaml
    runtime-endpoint: "unix:///run/containerd/containerd.sock"
    image-endpoint: "unix:///run/containerd/containerd.sock"
    timeout: 10
    debug: false
    pull-image-on-create: false
    disable-pull-on-run: false
    EOF

    # Install the Kubernetes packages, pinned to match --kubernetes-version in kubeadm init
    yum install -y kubelet-1.26.0 kubeadm-1.26.0 kubectl-1.26.0
    systemctl enable kubelet && systemctl start kubelet

Run the initialization on the master:

    # --apiserver-advertise-address is the master's IP from the machine table
    kubeadm init \
      --apiserver-advertise-address=192.168.137.133 \
      --image-repository registry.aliyuncs.com/google_containers \
      --kubernetes-version v1.26.0 \
      --service-cidr=10.96.0.0/12 \
      --pod-network-cidr=10.244.0.0/16 \
      --ignore-preflight-errors=all

If kubelet fails to start, inspect its startup files:

cat /usr/lib/systemd/system/kubelet.service.d/10-kubeadm.confcat /var/lib/kubelet/kubeadm-flags.env如果初始化出错重置命令

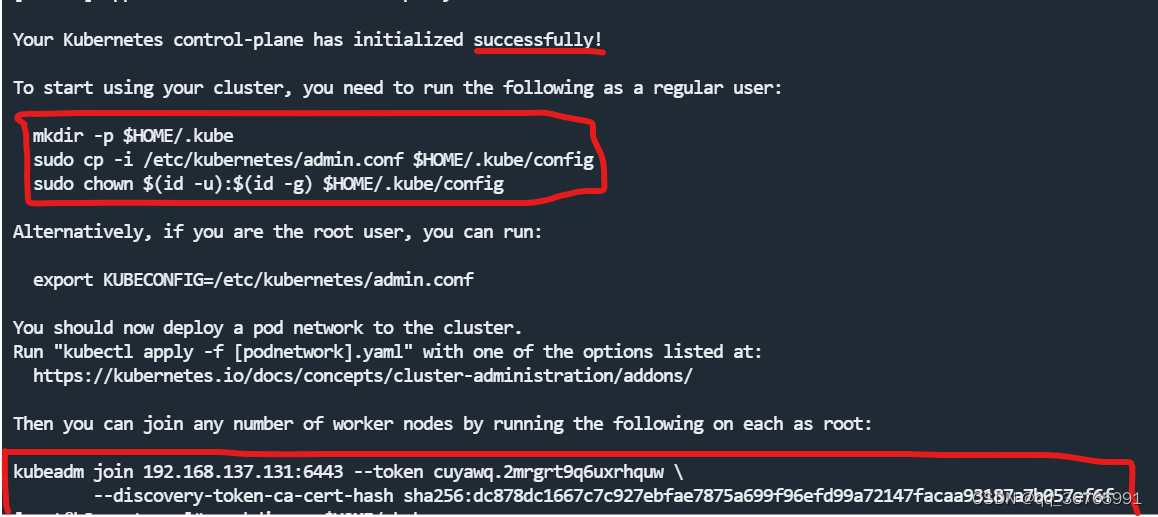

kubeadm resetrm -fr ~/.kube/ /etc/kubernetes/* var/lib/etcd/*出现如图表示成功
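
The flags passed to `kubeadm init` earlier can also be kept in a configuration file, which makes a retry after `kubeadm reset` less error-prone. What follows is a hedged sketch rather than the procedure this guide was tested with: the helper name `write_kubeadm_config` and the output file name are assumptions, the field names follow the `kubeadm.k8s.io/v1beta3` API used by kubeadm 1.26, and `--ignore-preflight-errors` is left out of the file on purpose.

```shell
# Write a kubeadm configuration equivalent to the init flags used above.
# Usage: write_kubeadm_config <output-file> <master-ip>
write_kubeadm_config() {
    cat > "$1" <<EOF
apiVersion: kubeadm.k8s.io/v1beta3
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: $2
---
apiVersion: kubeadm.k8s.io/v1beta3
kind: ClusterConfiguration
kubernetesVersion: v1.26.0
imageRepository: registry.aliyuncs.com/google_containers
networking:
  serviceSubnet: 10.96.0.0/12
  podSubnet: 10.244.0.0/16
EOF
}
```

With `MASTER_IP` set to the master's address (a variable introduced here only for illustration), the call would be `write_kubeadm_config kubeadm-config.yaml "$MASTER_IP"` followed by `kubeadm init --config kubeadm-config.yaml`.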

Then run the commands in the first red box of that output:

    mkdir -p $HOME/.kube
    sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
    sudo chown $(id -u):$(id -g) $HOME/.kube/config

On each node machine, run the second command from the output
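
The token and hash in the join command are unique to each cluster, and the token expires after 24 hours by default. A fresh join command can be printed on the master with `kubeadm token create --print-join-command`. As a small sanity check before pasting a command into a node, the sketch below validates the shape of the two values. The function names are hypothetical, and the patterns only check format, not whether the cluster accepts the values.

```shell
# A bootstrap token has the form "abcdef.0123456789abcdef":
# 6 lowercase alphanumerics, a dot, then 16 lowercase alphanumerics.
valid_bootstrap_token() {
    printf '%s\n' "$1" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'
}

# A discovery hash has the form "sha256:" followed by 64 hex digits.
valid_ca_cert_hash() {
    printf '%s\n' "$1" | grep -Eq '^sha256:[0-9a-f]{64}$'
}
```

A truncated copy-paste, the most common cause of a malformed join command, fails one of these checks immediately.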

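    # Use the exact command printed by your own kubeadm init; the token and hash differ per cluster
    kubeadm join 192.168.137.133:6443 --token cuyawq.2mrgrt9q6uxrhquw \
      --discovery-token-ca-cert-hash sha256:dc878dc1667c7c927ebfae7875a699f96efd99a72147facaa93187a7b057ef6f

Configure the CNI on the master: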

    cat > kube-flannel.yml << 'EOF'
    ---
    kind: Namespace
    apiVersion: v1
    metadata:
      name: kube-flannel
      labels:
        pod-security.kubernetes.io/enforce: privileged
    ---
    kind: ClusterRole
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: flannel
    rules:
    - apiGroups:
      - ""
      resources:
      - pods
      verbs:
      - get
    - apiGroups:
      - ""
      resources:
      - nodes
      verbs:
      - list
      - watch
    - apiGroups:
      - ""
      resources:
      - nodes/status
      verbs:
      - patch
    ---
    kind: ClusterRoleBinding
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: flannel
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: flannel
    subjects:
    - kind: ServiceAccount
      name: flannel
      namespace: kube-flannel
    ---
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: flannel
      namespace: kube-flannel
    ---
    kind: ConfigMap
    apiVersion: v1
    metadata:
      name: kube-flannel-cfg
      namespace: kube-flannel
      labels:
        tier: node
        app: flannel
    data:
      cni-conf.json: |
        {
          "name": "cbr0",
          "cniVersion": "0.3.1",
          "plugins": [
            {
              "type": "flannel",
              "delegate": {
                "hairpinMode": true,
                "isDefaultGateway": true
              }
            },
            {
              "type": "portmap",
              "capabilities": {
                "portMappings": true
              }
            }
          ]
        }
      net-conf.json: |
        {
          "Network": "10.244.0.0/16",
          "Backend": {
            "Type": "vxlan"
          }
        }
    ---
    apiVersion: apps/v1
    kind: DaemonSet
    metadata:
      name: kube-flannel-ds
      namespace: kube-flannel
      labels:
        tier: node
        app: flannel
    spec:
      selector:
        matchLabels:
          app: flannel
      template:
        metadata:
          labels:
            tier: node
            app: flannel
        spec:
          affinity:
            nodeAffinity:
              requiredDuringSchedulingIgnoredDuringExecution:
                nodeSelectorTerms:
                - matchExpressions:
                  - key: kubernetes.io/os
                    operator: In
                    values:
                    - linux
          hostNetwork: true
          priorityClassName: system-node-critical
          tolerations:
          - operator: Exists
            effect: NoSchedule
          serviceAccountName: flannel
          initContainers:
          - name: install-cni-plugin
            #image: flannelcni/flannel-cni-plugin:v1.1.0 for ppc64le and mips64le (dockerhub limitations may apply)
            image: docker.io/rancher/mirrored-flannelcni-flannel-cni-plugin:v1.1.0
            command:
            - cp
            args:
            - -f
            - /flannel
            - /opt/cni/bin/flannel
            volumeMounts:
            - name: cni-plugin
              mountPath: /opt/cni/bin
          - name: install-cni
            #image: flannelcni/flannel:v0.19.0 for ppc64le and mips64le (dockerhub limitations may apply)
            image: docker.io/rancher/mirrored-flannelcni-flannel:v0.19.0
            command:
            - cp
            args:
            - -f
            - /etc/kube-flannel/cni-conf.json
            - /etc/cni/net.d/10-flannel.conflist
            volumeMounts:
            - name: cni
              mountPath: /etc/cni/net.d
            - name: flannel-cfg
              mountPath: /etc/kube-flannel/
          containers:
          - name: kube-flannel
            #image: flannelcni/flannel:v0.19.0 for ppc64le and mips64le (dockerhub limitations may apply)
            image: docker.io/rancher/mirrored-flannelcni-flannel:v0.19.0
            command:
            - /opt/bin/flanneld
            args:
            - --ip-masq
            - --kube-subnet-mgr
            resources:
              requests:
                cpu: "100m"
                memory: "50Mi"
              limits:
                cpu: "100m"
                memory: "50Mi"
            securityContext:
              privileged: false
              capabilities:
                add: ["NET_ADMIN", "NET_RAW"]
            env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
            - name: EVENT_QUEUE_DEPTH
              value: "5000"
            volumeMounts:
            - name: run
              mountPath: /run/flannel
            - name: flannel-cfg
              mountPath: /etc/kube-flannel/
            - name: xtables-lock
              mountPath: /run/xtables.lock
          volumes:
          - name: run
            hostPath:
              path: /run/flannel
          - name: cni-plugin
            hostPath:
              path: /opt/cni/bin
          - name: cni
            hostPath:
              path: /etc/cni/net.d
          - name: flannel-cfg
            configMap:
              name: kube-flannel-cfg
          - name: xtables-lock
            hostPath:
              path: /run/xtables.lock
              type: FileOrCreate
    EOF
    kubectl apply -f kube-flannel.yml

After applying the manifest, check that it took effect:

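    kubectl get node
    kubectl get pods -n kube-system
    # The flannel pods run in the kube-flannel namespace created by the manifest
    kubectl get pods -n kube-flannel

Test by creating a container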
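
Nodes report NotReady until the CNI pods are running, so before creating the test deployment it helps to wait until every node is Ready. The sketch below is one way to do that. The function names `not_ready_nodes` and `wait_for_nodes_ready` are made up for illustration, and the parser assumes the default column layout of `kubectl get nodes --no-headers` (name first, status second).

```shell
# Read `kubectl get nodes --no-headers` output on stdin and print the
# names of nodes whose STATUS column is not exactly "Ready".
# A cordoned node ("Ready,SchedulingDisabled") is reported as not ready.
not_ready_nodes() {
    awk '$2 != "Ready" { print $1 }'
}

# Poll every 5 seconds until all nodes are Ready or the limit
# (in seconds, default 300) is reached. Returns 0 on success, 1 on timeout.
wait_for_nodes_ready() {
    local limit=${1:-300} waited=0 nodes
    while [ "$waited" -lt "$limit" ]; do
        nodes=$(kubectl get nodes --no-headers 2>/dev/null) || nodes=""
        if [ -n "$nodes" ] && [ -z "$(printf '%s\n' "$nodes" | not_ready_nodes)" ]; then
            return 0
        fi
        sleep 5
        waited=$((waited + 5))
    done
    return 1
}
```

kubectl also has a built-in equivalent, `kubectl wait --for=condition=Ready nodes --all --timeout=300s`; the hand-rolled loop is mainly useful when the names of the lagging nodes are wanted.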

    kubectl create deployment nginx --image=nginx
    kubectl expose deployment nginx --port=80 --type=NodePort
    # Check the pod and service
    kubectl get pod,svc

Open the NodePort shown in the output using the IP address of any node.
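
The port to use is the second number in the service's PORT(S) column, for example `80:31234/TCP`. As a rough sketch of how that value could be picked out in a script, the helper below parses a single-port entry; `node_port_from_ports` is a hypothetical name, and `31234` is an invented example port.

```shell
# Extract the node port from a single PORT(S) value such as "80:31234/TCP".
# Prints nothing if the value has no node port (for example "80/TCP").
node_port_from_ports() {
    printf '%s\n' "$1" | sed -nE 's#^[0-9]+:([0-9]+)/[A-Za-z]+$#\1#p'
}

# Example use against the nginx service created above:
#   ports=$(kubectl get svc nginx --no-headers | awk '{ print $5 }')
#   curl -s -o /dev/null -w '%{http_code}\n' "http://k8snode1:$(node_port_from_ports "$ports")"
```

kubectl can also return the port directly with `kubectl get svc nginx -o jsonpath='{.spec.ports[0].nodePort}'`, which avoids parsing the table output.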