1. Modify the YAML file

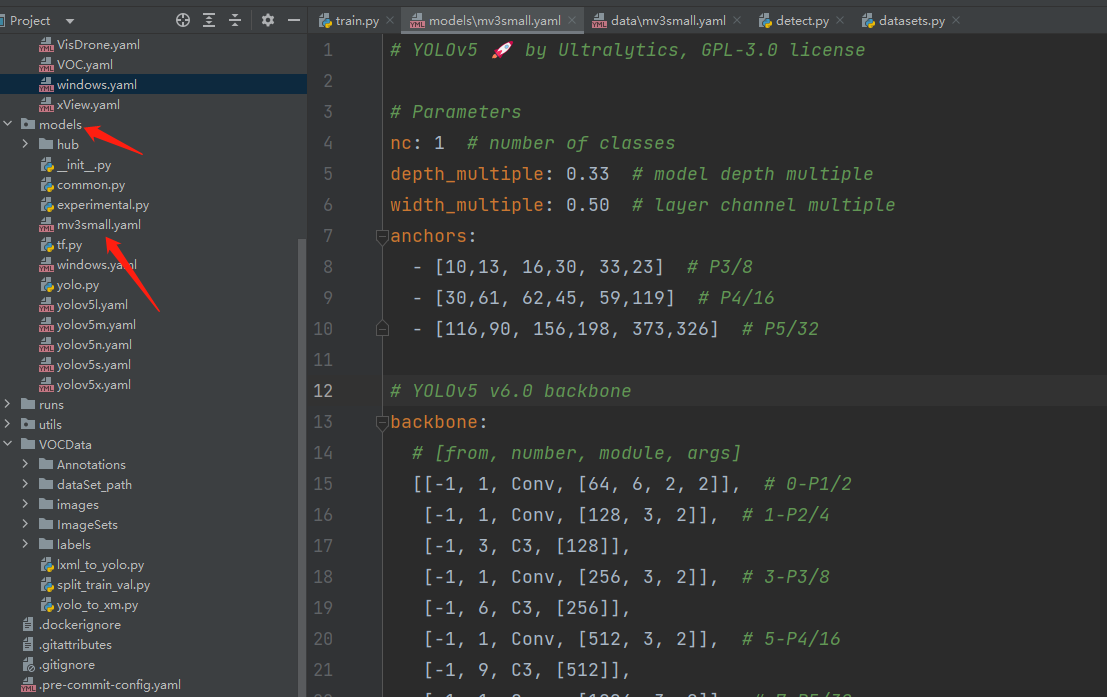

Copy yolov5s.yaml in the models folder and rename the copy, as shown in the figure:

Then replace the contents of the new file with the following code:

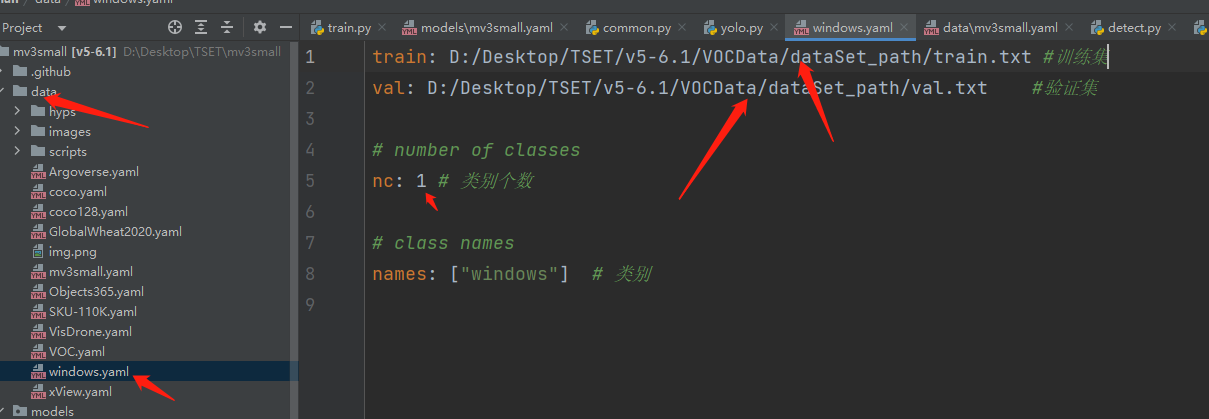

```yaml
# YOLOv5 🚀 by Ultralytics, GPL-3.0 license

# Parameters
nc: 1  # number of classes
depth_multiple: 1.0  # model depth multiple
width_multiple: 1.0  # layer channel multiple
anchors:
  - [10,13, 16,30, 33,23]  # P3/8
  - [30,61, 62,45, 59,119]  # P4/16
  - [116,90, 156,198, 373,326]  # P5/32

# Mobilenetv3-small backbone
# MobileNetV3_InvertedResidual [out_ch, hid_ch, k_s, stride, SE, HardSwish]
backbone:
  # [from, number, module, args]
  [[-1, 1, Conv_BN_HSwish, [16, 2]],  # 0-p1/2
   [-1, 1, MobileNetV3_InvertedResidual, [16, 16, 3, 2, 1, 0]],  # 1-p2/4
   [-1, 1, MobileNetV3_InvertedResidual, [24, 72, 3, 2, 0, 0]],  # 2-p3/8
   [-1, 1, MobileNetV3_InvertedResidual, [24, 88, 3, 1, 0, 0]],  # 3
   [-1, 1, MobileNetV3_InvertedResidual, [40, 96, 5, 2, 1, 1]],  # 4-p4/16
   [-1, 1, MobileNetV3_InvertedResidual, [40, 240, 5, 1, 1, 1]],  # 5
   [-1, 1, MobileNetV3_InvertedResidual, [40, 240, 5, 1, 1, 1]],  # 6
   [-1, 1, MobileNetV3_InvertedResidual, [48, 120, 5, 1, 1, 1]],  # 7
   [-1, 1, MobileNetV3_InvertedResidual, [48, 144, 5, 1, 1, 1]],  # 8
   [-1, 1, MobileNetV3_InvertedResidual, [96, 288, 5, 2, 1, 1]],  # 9-p5/32
   [-1, 1, MobileNetV3_InvertedResidual, [96, 576, 5, 1, 1, 1]],  # 10
   [-1, 1, MobileNetV3_InvertedResidual, [96, 576, 5, 1, 1, 1]],  # 11
  ]

# YOLOv5 v6.0 head
head:
  [[-1, 1, Conv, [96, 1, 1]],  # 12
   [-1, 1, nn.Upsample, [None, 2, 'nearest']],
   [[-1, 8], 1, Concat, [1]],  # cat backbone P4
   [-1, 3, C3, [144, False]],  # 15

   [-1, 1, Conv, [144, 1, 1]],  # 16
   [-1, 1, nn.Upsample, [None, 2, 'nearest']],
   [[-1, 3], 1, Concat, [1]],  # cat backbone P3
   [-1, 3, C3, [168, False]],  # 19 (P3/8-small)

   [-1, 1, Conv, [168, 3, 2]],
   [[-1, 16], 1, Concat, [1]],  # cat head P4
   [-1, 3, C3, [312, False]],  # 22 (P4/16-medium)

   [-1, 1, Conv, [312, 3, 2]],
   [[-1, 12], 1, Concat, [1]],  # cat head P5
   [-1, 3, C3, [408, False]],  # 25 (P5/32-large)

   [[19, 22, 25], 1, Detect, [nc, anchors]],  # Detect(P3, P4, P5)
  ]
```

Do the same for the data file, as shown in the figure:

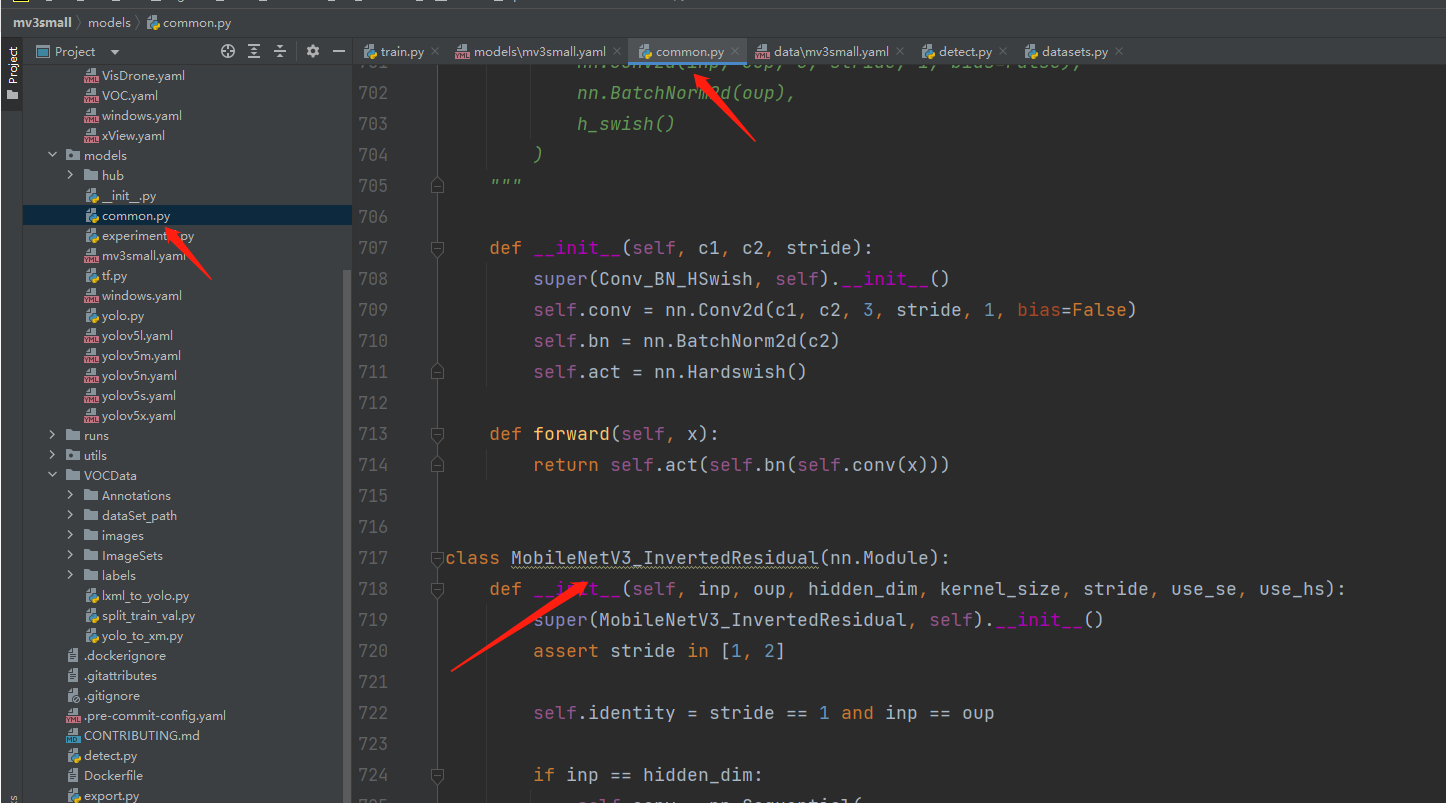
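As a quick consistency check of the backbone table in the model YAML above, the sketch below multiplies the stride argument of each backbone row (index 1 of the Conv_BN_HSwish args and index 3 of the MobileNetV3_InvertedResidual args, following the comment in the YAML) to show which layers sit at strides 8, 16 and 32. It is plain Python with no YOLOv5 imports, and the helper name `trace_backbone` is hypothetical.

```python
# Backbone rows copied from the model YAML: (module name, args)
BACKBONE = [
    ("Conv_BN_HSwish", [16, 2]),                              # 0
    ("MobileNetV3_InvertedResidual", [16, 16, 3, 2, 1, 0]),   # 1
    ("MobileNetV3_InvertedResidual", [24, 72, 3, 2, 0, 0]),   # 2
    ("MobileNetV3_InvertedResidual", [24, 88, 3, 1, 0, 0]),   # 3
    ("MobileNetV3_InvertedResidual", [40, 96, 5, 2, 1, 1]),   # 4
    ("MobileNetV3_InvertedResidual", [40, 240, 5, 1, 1, 1]),  # 5
    ("MobileNetV3_InvertedResidual", [40, 240, 5, 1, 1, 1]),  # 6
    ("MobileNetV3_InvertedResidual", [48, 120, 5, 1, 1, 1]),  # 7
    ("MobileNetV3_InvertedResidual", [48, 144, 5, 1, 1, 1]),  # 8
    ("MobileNetV3_InvertedResidual", [96, 288, 5, 2, 1, 1]),  # 9
    ("MobileNetV3_InvertedResidual", [96, 576, 5, 1, 1, 1]),  # 10
    ("MobileNetV3_InvertedResidual", [96, 576, 5, 1, 1, 1]),  # 11
]


def trace_backbone(rows):
    """Hypothetical helper: return (layer index, output channels, cumulative stride) per row."""
    total_stride = 1
    trace = []
    for i, (module, args) in enumerate(rows):
        # Conv_BN_HSwish args: [out_ch, stride]
        # MobileNetV3_InvertedResidual args: [out_ch, hid_ch, k_s, stride, SE, HardSwish]
        stride = args[1] if module == "Conv_BN_HSwish" else args[3]
        total_stride *= stride
        trace.append((i, args[0], total_stride))
    return trace


for i, channels, stride in trace_backbone(BACKBONE):
    print(f"layer {i:2d}: {channels:3d} channels, stride {stride}")
```

With these rows, layer 3 (24 channels), layer 8 (48 channels) and layer 11 (96 channels) are the last layers at strides 8, 16 and 32 respectively, which is consistent with the head starting from layer 11 and concatenating layers 8 (P4) and 3 (P3).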

2. Modify common.py

Append the following code to the end of common.py:

```python
# Mobilenetv3Small
# (common.py already imports torch.nn as nn at the top of the file)


class SeBlock(nn.Module):
    def __init__(self, in_channel, reduction=4):
        super().__init__()
        self.Squeeze = nn.AdaptiveAvgPool2d(1)
        self.Excitation = nn.Sequential()
        # on the pooled 1x1 map, a 1x1 convolution has the same effect as a fully connected layer
        self.Excitation.add_module('FC1', nn.Conv2d(in_channel, in_channel // reduction, kernel_size=1))
        self.Excitation.add_module('ReLU', nn.ReLU())
        self.Excitation.add_module('FC2', nn.Conv2d(in_channel // reduction, in_channel, kernel_size=1))
        self.Excitation.add_module('Sigmoid', nn.Sigmoid())

    def forward(self, x):
        y = self.Squeeze(x)
        output = self.Excitation(y)
        # rescale every channel of x by its learned weight
        return x * (output.expand_as(x))


class Conv_BN_HSwish(nn.Module):
    """
    This equals to
    def conv_3x3_bn(inp, oup, stride):
        return nn.Sequential(
            nn.Conv2d(inp, oup, 3, stride, 1, bias=False),
            nn.BatchNorm2d(oup),
            h_swish()
        )
    """

    def __init__(self, c1, c2, stride):
        super(Conv_BN_HSwish, self).__init__()
        self.conv = nn.Conv2d(c1, c2, 3, stride, 1, bias=False)
        self.bn = nn.BatchNorm2d(c2)
        self.act = nn.Hardswish()

    def forward(self, x):
        return self.act(self.bn(self.conv(x)))


class MobileNetV3_InvertedResidual(nn.Module):
    def __init__(self, inp, oup, hidden_dim, kernel_size, stride, use_se, use_hs):
        super(MobileNetV3_InvertedResidual, self).__init__()
        assert stride in [1, 2]

        # residual shortcut only when spatial size and channel count are unchanged
        self.identity = stride == 1 and inp == oup

        if inp == hidden_dim:
            self.conv = nn.Sequential(
                # dw
                nn.Conv2d(hidden_dim, hidden_dim, kernel_size, stride, (kernel_size - 1) // 2, groups=hidden_dim, bias=False),
                nn.BatchNorm2d(hidden_dim),
                nn.Hardswish() if use_hs else nn.ReLU(),
                # Squeeze-and-Excite (an empty nn.Sequential() passes the input through unchanged)
                SeBlock(hidden_dim) if use_se else nn.Sequential(),
                # pw-linear
                nn.Conv2d(hidden_dim, oup, 1, 1, 0, bias=False),
                nn.BatchNorm2d(oup),
            )
        else:
            self.conv = nn.Sequential(
                # pw
                nn.Conv2d(inp, hidden_dim, 1, 1, 0, bias=False),
                nn.BatchNorm2d(hidden_dim),
                nn.Hardswish() if use_hs else nn.ReLU(),
                # dw
                nn.Conv2d(hidden_dim, hidden_dim, kernel_size, stride, (kernel_size - 1) // 2, groups=hidden_dim, bias=False),
                nn.BatchNorm2d(hidden_dim),
                # Squeeze-and-Excite
                SeBlock(hidden_dim) if use_se else nn.Sequential(),
                nn.Hardswish() if use_hs else nn.ReLU(),
                # pw-linear
                nn.Conv2d(hidden_dim, oup, 1, 1, 0, bias=False),
                nn.BatchNorm2d(oup),
            )

    def forward(self, x):
        y = self.conv(x)
        if self.identity:
            return x + y
        else:
            return y
```

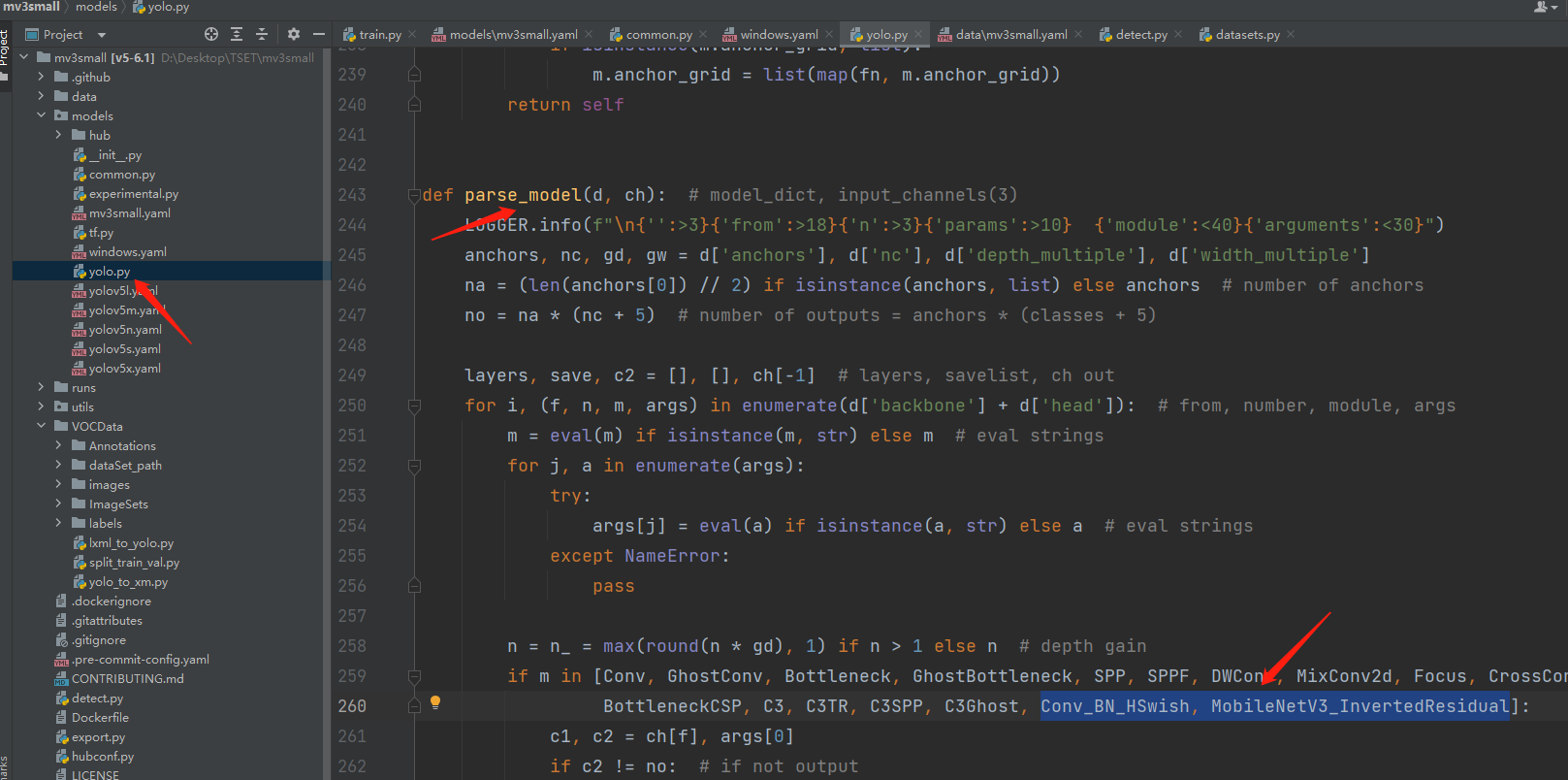
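To see why a block keeps or halves the feature-map size, and when it adds the residual shortcut, the sketch below reproduces two pieces of logic from MobileNetV3_InvertedResidual in plain Python: the output-size formula of nn.Conv2d with dilation 1 and padding `(kernel_size - 1) // 2`, and the `inp == hidden_dim` / `self.identity` conditions. The helper names `conv_out_size` and `describe_block` are hypothetical, and nothing here imports torch.

```python
def conv_out_size(size, kernel_size, stride):
    """Spatial output size of nn.Conv2d with dilation 1 and padding (kernel_size - 1) // 2,
    the padding used by the depthwise conv in MobileNetV3_InvertedResidual."""
    padding = (kernel_size - 1) // 2
    return (size + 2 * padding - kernel_size) // stride + 1


def describe_block(inp, oup, hidden_dim, kernel_size, stride, size):
    """Hypothetical helper mirroring the branching in MobileNetV3_InvertedResidual.__init__."""
    return {
        # the 1x1 expansion ("pw") conv is only built when inp != hidden_dim
        "has_expansion": inp != hidden_dim,
        # same condition as self.identity: residual add only if the shape is unchanged
        "has_shortcut": stride == 1 and inp == oup,
        "out_size": conv_out_size(size, kernel_size, stride),
    }


# YAML layer 1 on a 160x160 map (a 320x320 image after layer 0)
print(describe_block(16, 16, 16, 3, 2, 160))
# {'has_expansion': False, 'has_shortcut': False, 'out_size': 80}

# YAML layer 5 on a 20x20 map
print(describe_block(40, 40, 240, 5, 1, 20))
# {'has_expansion': True, 'has_shortcut': True, 'out_size': 20}
```

Because the padding is `(k - 1) // 2`, stride 1 keeps the size for the odd kernel sizes used here (3 and 5), and stride 2 gives `ceil(size / 2)`.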

3. Modify yolo.py

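In the parse_model function of yolo.py, add the following module names (alongside Conv, C3 and the other modules already listed there):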

```python
Conv_BN_HSwish, MobileNetV3_InvertedResidual
```

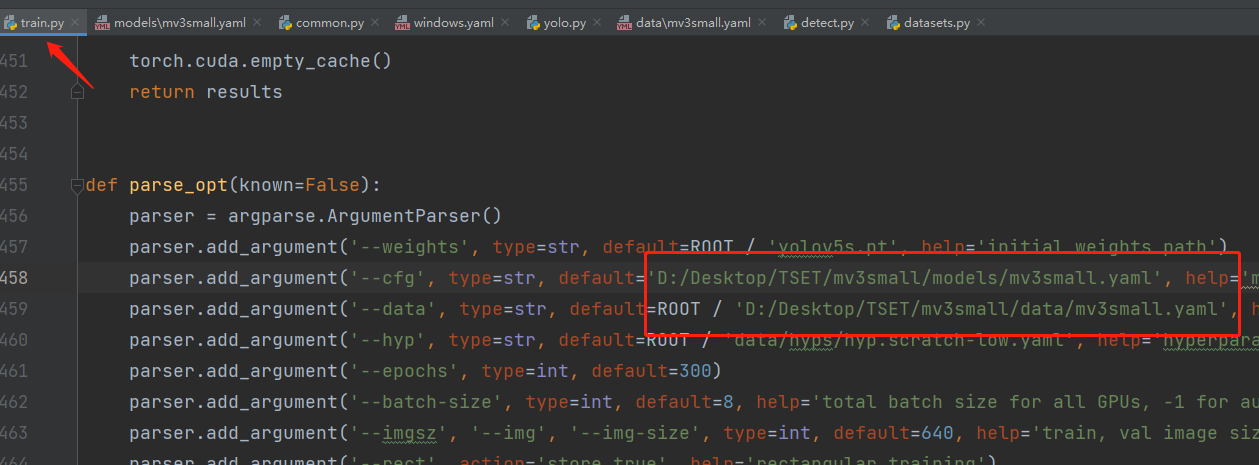
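The names have to be registered because, for modules in that list, parse_model prepends the channel count of the `from` layer (c1) to the YAML args and scales the first arg (c2) by width_multiple; otherwise the YAML args would most likely be passed to the constructor unchanged, leaving it without its c1 argument. The sketch below is a simplified, hypothetical re-creation of that step in plain Python (it ignores the `c2 != no` check and the extra handling for C3-style modules), just to show how a YAML row maps onto the constructor signatures in common.py.

```python
import math


def make_divisible(x, divisor=8):
    # round x up to a multiple of divisor (same formula YOLOv5 uses for integer divisors)
    return math.ceil(x / divisor) * divisor


def expand_args(c1, yaml_args, width_multiple=1.0):
    """Hypothetical, simplified version of what parse_model does for registered modules:
    scale the output channels (first YAML arg) and prepend the input channels c1."""
    c2 = make_divisible(yaml_args[0] * width_multiple, 8)
    return [c1, c2, *yaml_args[1:]]


# Row 0: Conv_BN_HSwish [16, 2] on a 3-channel image -> Conv_BN_HSwish(c1=3, c2=16, stride=2)
print(expand_args(3, [16, 2]))  # [3, 16, 2]

# Row 1: MobileNetV3_InvertedResidual [16, 16, 3, 2, 1, 0] after a 16-channel layer
# -> (inp, oup, hidden_dim, kernel_size, stride, use_se, use_hs)
print(expand_args(16, [16, 16, 3, 2, 1, 0]))  # [16, 16, 16, 3, 2, 1, 0]
```

Note that only the first arg is scaled: with a width_multiple below 1.0, hid_ch would keep its literal value from the YAML. With width_multiple: 1.0, as in this file, all channel numbers are used as written.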

4. Modify train.py

In train.py, change the paths as shown in the figure:

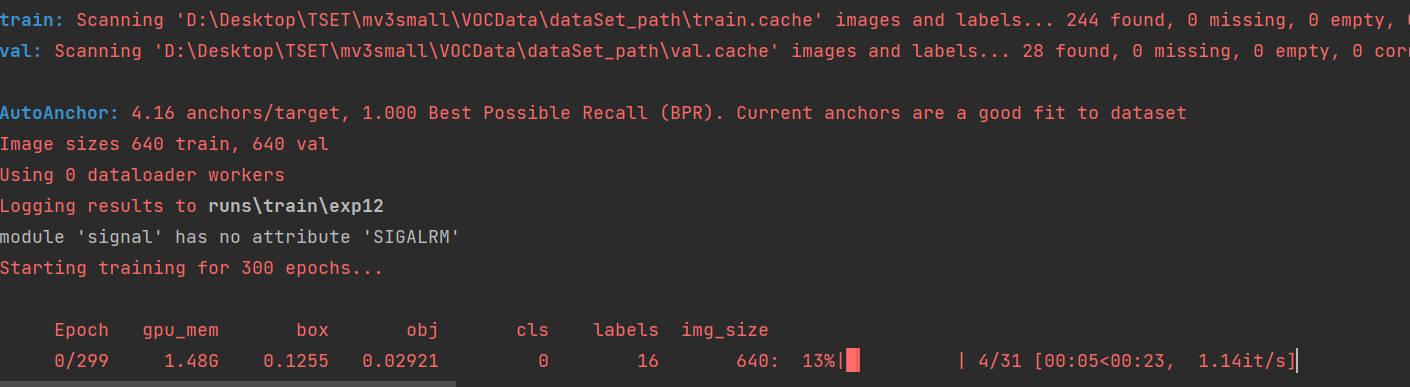

Then run train.py to start training, as shown in the figure.

If anything above is wrong, corrections are very welcome.