Building a Kubernetes cluster on virtual machines


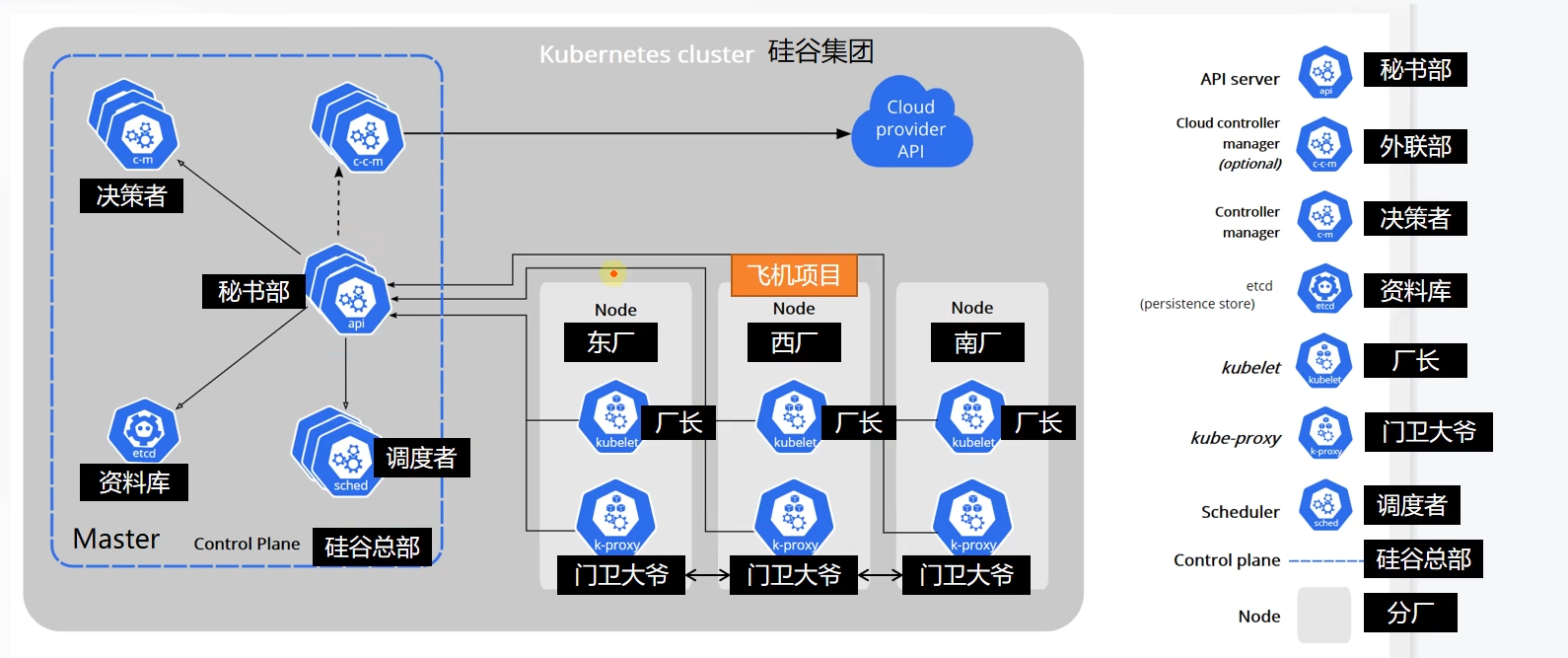

1. Kubernetes architecture

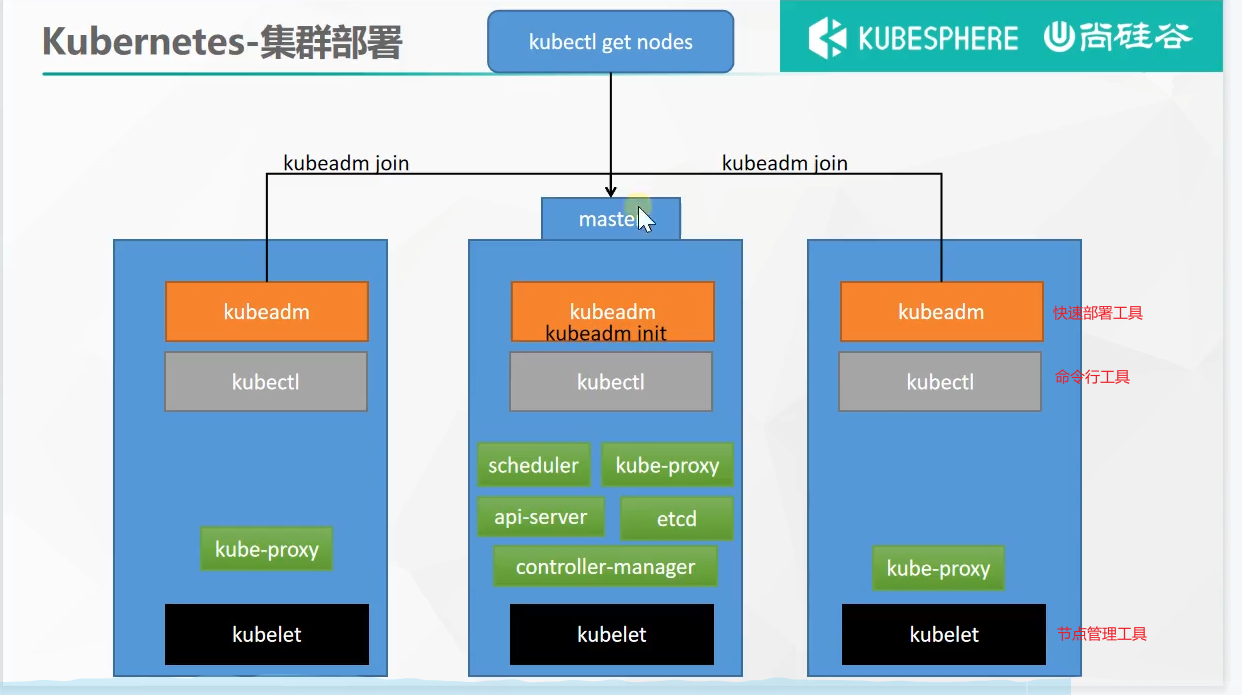

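2. Kubernetes cluster deployment model

3. Preparing the environment for the k8s installation

I installed three virtual machines locally. Note that k8s enforces minimum hardware requirements on the virtual machines: at least 2 CPU cores and 2 GB of memory (see 7.1).

Key points: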

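    # 1. Set the hostname (hostnames must be unique)
    # CentOS: run the matching command on each machine
    hostnamectl set-hostname k8s-master
    hostnamectl set-hostname k8s-node1
    hostnamectl set-hostname k8s-node2

    # 2. Set SELinux to permissive mode (effectively disabling it)
    sudo setenforce 0
    sudo sed -i 's/^SELINUX=enforcing$/SELINUX=permissive/' /etc/selinux/config

    # 3. Turn off swap, now and permanently (comment out the swap entries in /etc/fstab)
    swapoff -a
    sed -ri 's/.*swap.*/#&/' /etc/fstab

    # 4. Let iptables see bridged traffic (IPv4 and IPv6)
    cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf
    br_netfilter
    EOF
    # modules-load.d only takes effect at boot, so also load the module right now
    sudo modprobe br_netfilter

    cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf
    net.bridge.bridge-nf-call-ip6tables = 1
    net.bridge.bridge-nf-call-iptables = 1
    EOF
    sudo sysctl --system

4. Installing kubelet, kubeadm and kubectl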
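Before installing the components, you may want to confirm that the swap and bridge settings from section 3 actually took effect. The sketch below is a minimal check written for this article, not kubeadm's own preflight logic. The function names are hypothetical. The /proc paths are parameters so the checks can also be pointed at test copies.

```bash
#!/usr/bin/env bash
# Hypothetical preflight sketch: checks the swap and bridge settings from
# section 3. Paths are parameters so the functions can be tested on copies.

# Succeeds if no swap device is active. /proc/swaps always has one header
# line, so more than one line means some swap is still on.
swap_is_off() {
  local swaps_file="${1:-/proc/swaps}"
  [[ $(wc -l < "$swaps_file") -le 1 ]]
}

# Succeeds if the given /proc/sys file is readable and contains exactly "1".
sysctl_is_one() {
  local f="$1"
  [[ -r "$f" && "$(tr -d '[:space:]' < "$f")" == "1" ]]
}

# Prints one line per check and returns non-zero if any check failed.
# $1 is the proc root (default /proc).
preflight_report() {
  local root="${1:-/proc}" status=0 key
  if swap_is_off "$root/swaps"; then
    echo "swap: off"
  else
    echo "swap: STILL ON (run swapoff -a)"; status=1
  fi
  for key in bridge-nf-call-iptables bridge-nf-call-ip6tables; do
    if sysctl_is_one "$root/sys/net/bridge/$key"; then
      echo "net.bridge.$key = 1"
    else
      echo "net.bridge.$key is not 1 (is br_netfilter loaded?)"; status=1
    fi
  done
  return "$status"
}
```

Usage: `preflight_report || echo "fix the items above first"`. The SELinux step is not covered here. `getenforce` should print `Permissive` after step 2.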

    # Configure the package repository (Aliyun mirror)
    cat <<EOF | sudo tee /etc/yum.repos.d/kubernetes.repo
    [kubernetes]
    name=Kubernetes
    baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
    enabled=1
    gpgcheck=0
    repo_gpgcheck=0
    gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
    exclude=kubelet kubeadm kubectl
    EOF

    # Install the three components (pinned to 1.20.9)
    sudo yum install -y kubelet-1.20.9 kubeadm-1.20.9 kubectl-1.20.9 --disableexcludes=kubernetes
    sudo systemctl enable --now kubelet

    # Check the status of kubelet (the "factory manager" on each node).
    # Until kubeadm init/join has run, kubelet restarting every few seconds is expected.
    systemctl status kubelet

5. Bootstrapping the cluster with kubeadm

5.1 Pulling the images each machine needs
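The images.sh script in this section pulls a fixed list of images. Once kubeadm is installed, `kubeadm config images list --kubernetes-version v1.20.9 --image-repository registry.cn-hangzhou.aliyuncs.com/lfy_k8s_images` prints the images kubeadm itself expects.

The sketch below is a dry run of the script's loop. It prints the full references it would pull, so you can compare the two lists before downloading anything. The function name `image_refs` is hypothetical. The registry and tags are copied from the script.

```bash
#!/usr/bin/env bash
# Dry-run sketch of the images.sh loop: print "registry/name:tag" for every
# image instead of pulling it. Registry and tags are the ones used in images.sh.

REGISTRY="registry.cn-hangzhou.aliyuncs.com/lfy_k8s_images"
IMAGES=(
  kube-apiserver:v1.20.9
  kube-proxy:v1.20.9
  kube-controller-manager:v1.20.9
  kube-scheduler:v1.20.9
  coredns:1.7.0
  etcd:3.4.13-0
  pause:3.2
)

# Prints one full image reference per line; $1 is the registry prefix,
# the remaining arguments are name:tag pairs.
image_refs() {
  local registry="$1"; shift
  local img
  for img in "$@"; do
    printf '%s/%s\n' "$registry" "$img"
  done
}
```

Usage: `image_refs "$REGISTRY" "${IMAGES[@]}"`. If a line is missing from kubeadm's own list (or vice versa), the tags in images.sh probably do not match the Kubernetes version.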

    # Pull the k8s internal component images (run on every machine)
    sudo tee ./images.sh <<-'EOF'
    #!/bin/bash
    images=(
    kube-apiserver:v1.20.9
    kube-proxy:v1.20.9
    kube-controller-manager:v1.20.9
    kube-scheduler:v1.20.9
    coredns:1.7.0
    etcd:3.4.13-0
    pause:3.2
    )
    for imageName in ${images[@]} ; do
    docker pull registry.cn-hangzhou.aliyuncs.com/lfy_k8s_images/$imageName
    done
    EOF

    # Make images.sh executable and run it
    chmod +x ./images.sh && ./images.sh

5.2 Initializing the master node

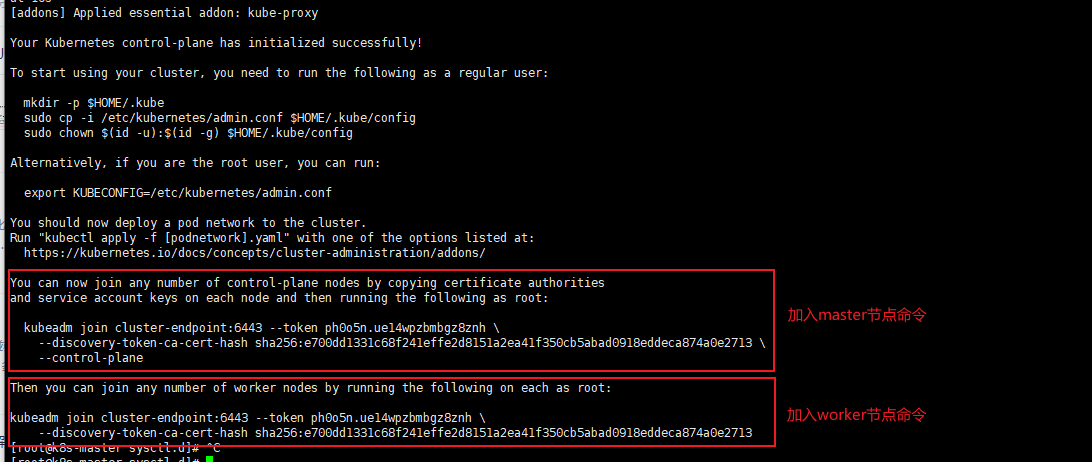

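    # On ALL machines, add a hosts entry for the master (replace the IP with your own)
    # This tells every node which machine is the master
    echo "192.168.149.128 cluster-endpoint" >> /etc/hosts

    # Initialize the master node (if this fails, run kubeadm reset before retrying)
    kubeadm init \
    --apiserver-advertise-address=192.168.149.128 \
    --control-plane-endpoint=cluster-endpoint \
    --image-repository registry.cn-hangzhou.aliyuncs.com/lfy_k8s_images \
    --kubernetes-version v1.20.9 \
    --service-cidr=10.96.0.0/16 \
    --pod-network-cidr=172.16.0.0/16

--pod-network-cidr originally read 192.168.0.0/16. The /16 is the prefix length, which fixes how many addresses the range covers. My VMs use 192.168.149.x addresses, which fall inside that range, so I changed it to 172.16.0.0/16.

I found a "solution" online from a would-be guru for running Calico on virtual machines: --pod-network-cidr=192.168.149.1/16. It does not work, because that range overlaps the host machines' own IP addresses. The guru ended up using Flannel as the network plugin instead (hence this rant). The rule is that no two network ranges may overlap: the host network, the service CIDR and the pod CIDR must all be disjoint.

The output of a successful initialization is shown below. If initialization fails, you must run kubeadm reset before trying again. Remember this; the troubleshooting notes in section 7 have more details.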

    [addons] Applied essential addon: kube-proxy

    Your Kubernetes control-plane has initialized successfully!

    # 1. Before using the cluster, set up the regular config on the master node
    To start using your cluster, you need to run the following as a regular user:

      mkdir -p $HOME/.kube
      sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
      sudo chown $(id -u):$(id -g) $HOME/.kube/config

    Alternatively, if you are the root user, you can run:

      export KUBECONFIG=/etc/kubernetes/admin.conf

    # 2. Add a network plugin
    You should now deploy a pod network to the cluster.
    Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
      https://kubernetes.io/docs/concepts/cluster-administration/addons/

    # 3. Add more master (control-plane) nodes
    You can now join any number of control-plane nodes by copying certificate authorities
    and service account keys on each node and then running the following as root:

      kubeadm join cluster-endpoint:6443 --token xj69sq.eqwhu8s2h9ifrcq9 \
        --discovery-token-ca-cert-hash sha256:2aae2083882147c6e56f877b7d6bf8419c7d2225b96b45509dce89b3fb80cf57 \
        --control-plane

    # 4. Add worker nodes
    Then you can join any number of worker nodes by running the following on each as root:

    kubeadm join cluster-endpoint:6443 --token xj69sq.eqwhu8s2h9ifrcq9 \
        --discovery-token-ca-cert-hash sha256:2aae2083882147c6e56f877b7d6bf8419c7d2225b96b45509dce89b3fb80cf57

5.2.1 Setting up .kube/config

    mkdir -p $HOME/.kube
    sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
    sudo chown $(id -u):$(id -g) $HOME/.kube/config

5.2.2 Installing the network plugin

https://kubernetes.io/docs/concepts/cluster-administration/addons/ [available network add-ons]

https://projectcalico.docs.tigera.io/about/about-calico [calico]

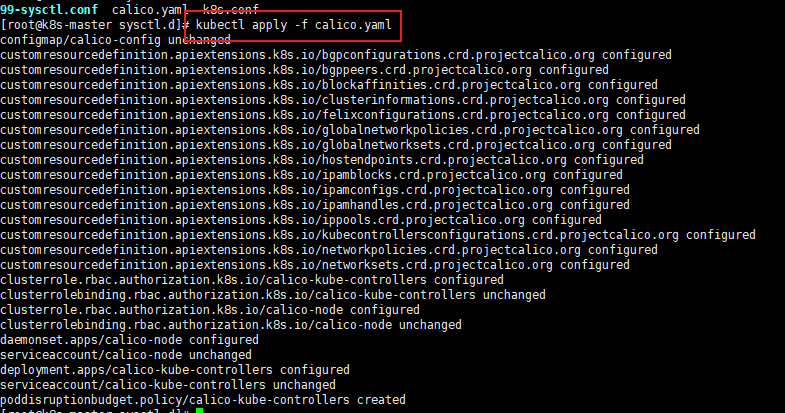

    # Commands to add the network plugin
    # Pitfall: the unversioned manifest does not work with k8s 1.20, see 7.3
    # curl https://docs.projectcalico.org/manifests/calico.yaml -O
    curl https://docs.projectcalico.org/v3.20/manifests/calico.yaml -O
    kubectl apply -f calico.yaml

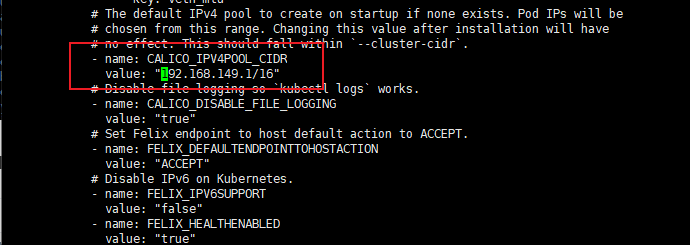

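5.2.3 Changing the pod CIDR in calico.yaml

This is the problem mentioned earlier: the local VMs' IP addresses fall inside Calico's default address range, so a different range is needed.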
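Whether two ranges overlap comes down to simple arithmetic. Convert each CIDR to its first and last address as 32-bit integers, and the ranges overlap exactly when each one starts before the other ends.

The sketch below does this in plain bash for IPv4 only. The function names are hypothetical. The VM address is written as a /32 so a single host can be compared against a range.

```bash
#!/usr/bin/env bash
# Sketch: IPv4 CIDR overlap check using bash integer arithmetic (64-bit),
# no external tools. Every argument must be in a.b.c.d/prefix form.

# Converts a dotted-quad address to a 32-bit integer (10# avoids octal parsing).
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (10#$a << 24) | (10#$b << 16) | (10#$c << 8) | 10#$d ))
}

# Prints the first and last address of a CIDR range, as integers.
cidr_bounds() {
  local ip="${1%/*}" prefix="${1#*/}"
  local mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  local start=$(( $(ip_to_int "$ip") & mask ))
  echo "$start $(( start | (~mask & 0xFFFFFFFF) ))"
}

# Succeeds (exit status 0) if the two ranges share at least one address.
cidr_overlap() {
  local s1 e1 s2 e2
  read -r s1 e1 <<< "$(cidr_bounds "$1")"
  read -r s2 e2 <<< "$(cidr_bounds "$2")"
  (( s1 <= e2 && s2 <= e1 ))
}
```

Usage: `cidr_overlap 192.168.0.0/16 192.168.149.128/32 && echo overlap` reports the clash with Calico's default range. With 172.16.0.0/16 against the same host, and with 10.96.0.0/16 (service CIDR) against 172.16.0.0/16 (pod CIDR), there is no overlap.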

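    vi calico.yaml
    # During kubeadm init, --pod-network-cidr was changed from 192.168.0.0/16 to 172.16.0.0/16,
    # so change the address in calico.yaml to 172.16.0.0/16 as well
    # (the CALICO_IPV4POOL_CIDR entry: uncomment it and set its value)
    kubectl apply -f calico.yaml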

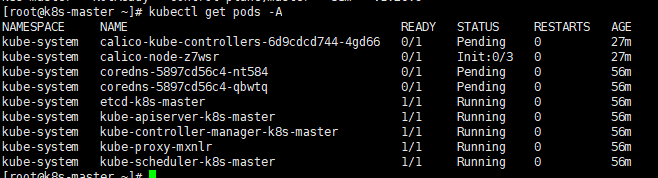
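The edit above can also be scripted. The sketch assumes calico.yaml still contains the default, commented-out pair `# - name: CALICO_IPV4POOL_CIDR` / `#   value: "192.168.0.0/16"` in exactly that form; check first with `grep -n CALICO_IPV4POOL_CIDR calico.yaml`. The function name is hypothetical.

The comment next to this setting in the Calico manifest says that changing it after installation has no effect. For that reason it seems safest to make this edit before the first `kubectl apply`.

```bash
#!/usr/bin/env bash
# Sketch: uncomment CALICO_IPV4POOL_CIDR in calico.yaml and set it to the
# --pod-network-cidr passed to kubeadm init. Assumes the default lines
#   # - name: CALICO_IPV4POOL_CIDR
#   #   value: "192.168.0.0/16"
# are present unchanged. Writes via a temp file, so it works with GNU and BSD sed.

set_calico_pool_cidr() {
  local file="$1" cidr="$2" tmp
  tmp="$(mktemp)" || return 1
  # Remove the "# " / "#" comment markers; the leading spaces that remain keep
  # the YAML indentation of the env list. Then swap in the new range.
  sed -e 's|# - name: CALICO_IPV4POOL_CIDR|- name: CALICO_IPV4POOL_CIDR|' \
      -e "s|#   value: \"192.168.0.0/16\"|  value: \"${cidr}\"|" \
      "$file" > "$tmp" && mv "$tmp" "$file"
}
```

Usage: `set_calico_pool_cidr calico.yaml 172.16.0.0/16 && kubectl apply -f calico.yaml`.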

5.2.4 Checking the pods k8s has started

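    # List all nodes in the cluster
    kubectl get nodes

    # Create resources in the cluster from a config file
    kubectl apply -f xxxx.yaml

    # Which applications are deployed in the cluster? A running application is
    # called a container in Docker and a Pod in k8s
    docker ps            # Docker
    kubectl get pods -A  # k8s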

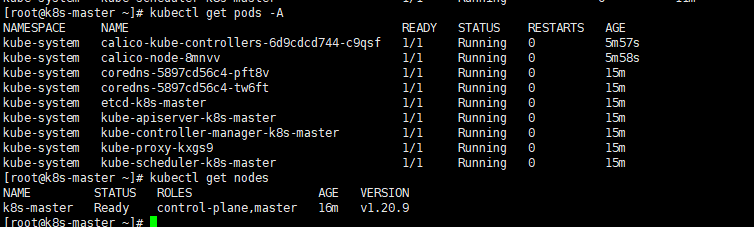
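With many system pods, it is easy to miss one stuck in `ContainerCreating` or running but not ready. The sketch below filters `kubectl get pods -A` output down to those pods. It assumes the default column layout (NAMESPACE NAME READY STATUS RESTARTS AGE); `not_ready_pods` is a hypothetical helper, not a kubectl feature.

```bash
#!/usr/bin/env bash
# Sketch: read `kubectl get pods -A` output on stdin and print the pods that
# are not fully up: STATUS other than Running/Completed, or Running with
# READY like 0/1. Assumes the default column order of `kubectl get pods -A`.

not_ready_pods() {
  awk 'NR > 1 {
    split($3, r, "/")   # READY column, e.g. "1/1" -> r[1]=1, r[2]=1
    if (($4 != "Running" && $4 != "Completed") || ($4 == "Running" && r[1] != r[2]))
      print $1 "/" $2 " " $3 " " $4
  }'
}
```

Usage: `kubectl get pods -A | not_ready_pods`. Empty output means every pod is running and ready. Calico and CoreDNS pods usually need a minute or two after the plugin is applied.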

5.2.5 The master node is up

5.3 Joining the worker nodes
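The join command below carries two values: a bootstrap token, and the hash of the cluster CA's public key (`--discovery-token-ca-cert-hash`). `kubeadm token create --print-join-command` regenerates both. `kubeadm token list` shows which tokens still exist.

The sketch below shows how each value can be checked or recomputed by hand. The openssl pipeline follows the one in the kubeadm join documentation. The function names are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: inspect the two values in a kubeadm join command.

# A bootstrap token has the form <6 chars>.<16 chars>, lowercase letters and
# digits only, e.g. xj69sq.eqwhu8s2h9ifrcq9.
is_bootstrap_token() {
  local re='^[[:lower:][:digit:]]{6}\.[[:lower:][:digit:]]{16}$'
  [[ "$1" =~ $re ]]
}

# Recomputes the --discovery-token-ca-cert-hash value (SHA-256 of the CA's
# DER-encoded public key). Run on the master; requires openssl.
ca_cert_hash() {
  local ca="${1:-/etc/kubernetes/pki/ca.crt}"
  openssl x509 -pubkey -in "$ca" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* /sha256:/'
}
```

Usage: on the master, `ca_cert_hash` should print the same `sha256:...` string that appears in the join command.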

    # Run the worker-node join command from the init output on each worker (valid for 24 hours)
    kubeadm join cluster-endpoint:6443 --token xj69sq.eqwhu8s2h9ifrcq9 \
        --discovery-token-ca-cert-hash sha256:2aae2083882147c6e56f877b7d6bf8419c7d2225b96b45509dce89b3fb80cf57

    # Once the token has expired, run this on the master to print a fresh join command
    kubeadm token create --print-join-command

6. Installing the dashboard UI

6.1 Deploying the dashboard

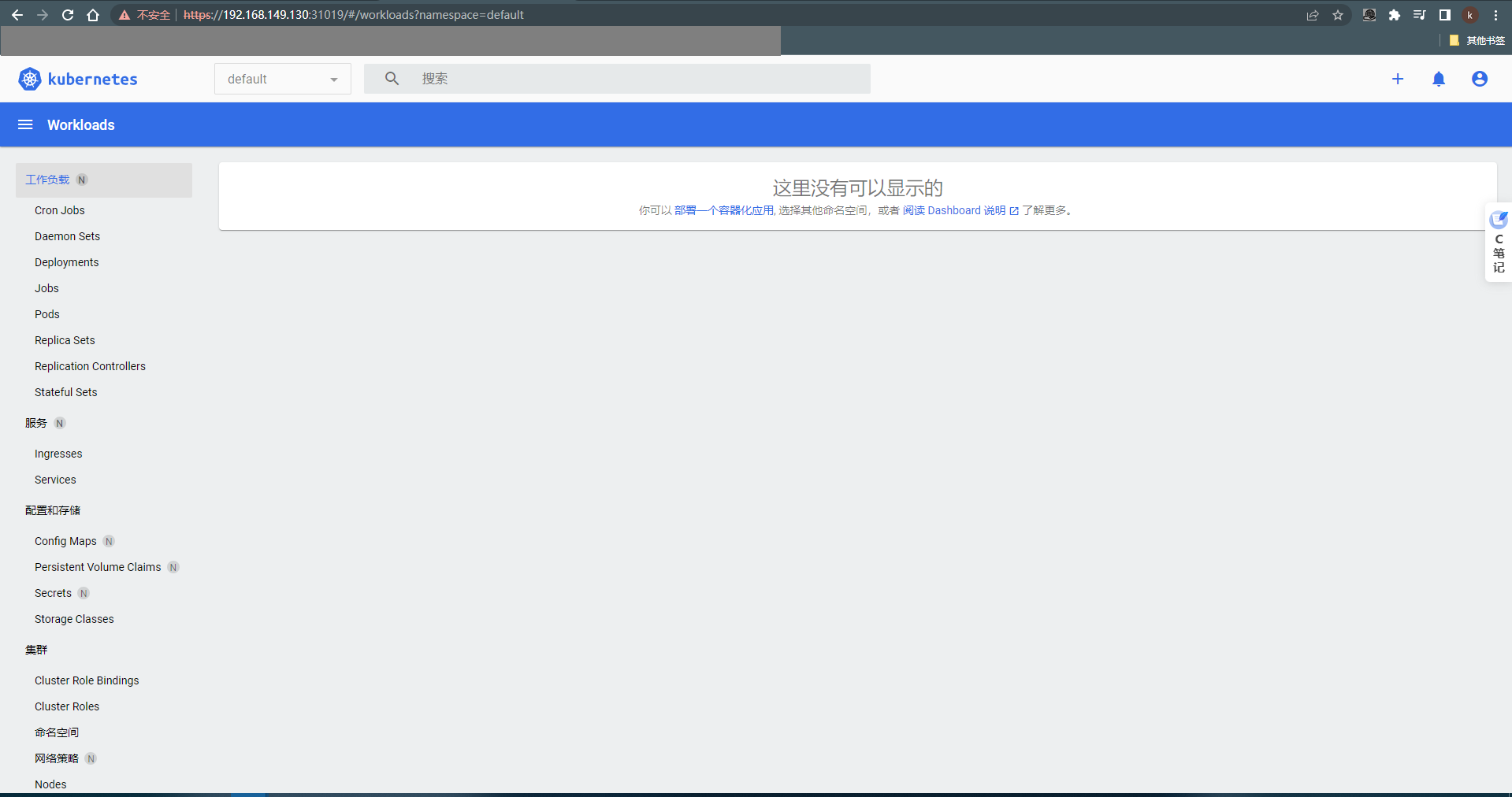

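    # The official Kubernetes web UI
    # https://github.com/kubernetes/dashboard
    kubectl apply -f https://raw.githubusercontent.com/kubernetes/dashboard/v2.3.1/aio/deploy/recommended.yaml

Then edit the kubernetes-dashboard service (for example with kubectl edit svc kubernetes-dashboard -n kubernetes-dashboard) and change type: from ClusterIP to NodePort.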

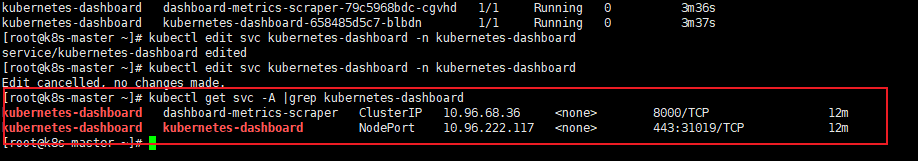

    spec:
      clusterIP: 10.96.222.117
      clusterIPs:
      - 10.96.222.117
      externalTrafficPolicy: Cluster
      ports:
      - nodePort: 31019
        port: 443
        protocol: TCP
        targetPort: 8443
      selector:
        k8s-app: kubernetes-dashboard
      sessionAffinity: None
      type: NodePort

Exposing the dashboard port:

    # Note: the NodePort shown below (31019 here) is assigned by k8s and must be open in the firewall
    kubectl get svc -A | grep kubernetes-dashboard

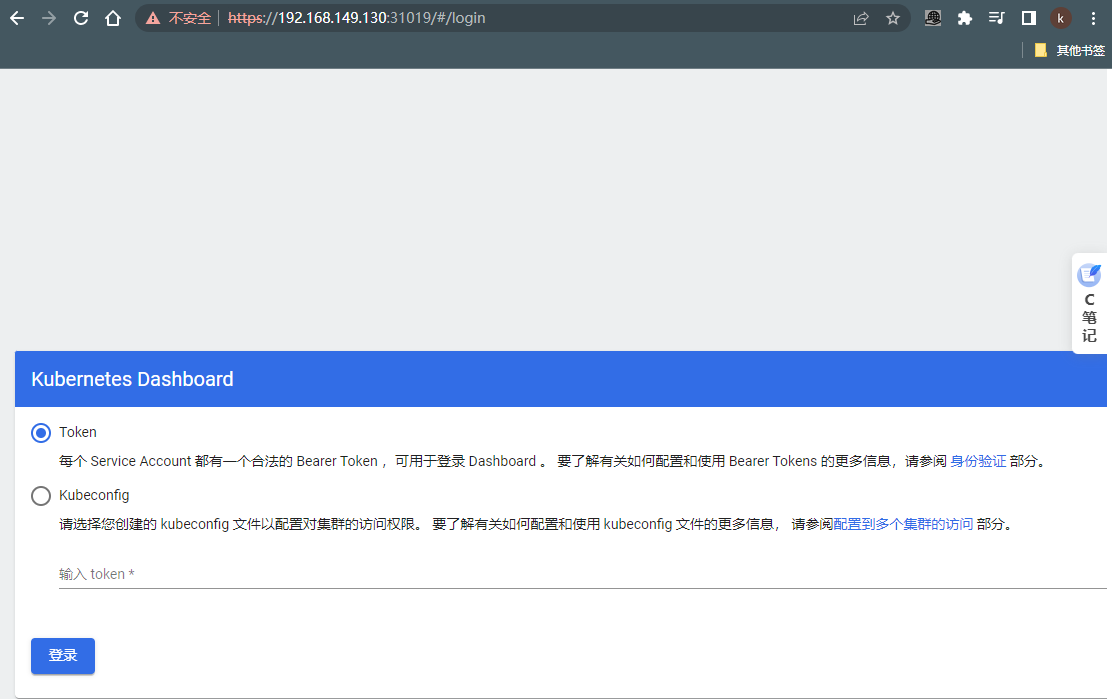
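The port to use is the number after the colon in the PORT(S) column (`443:31019/TCP`). The sketch below pulls it out of the `kubectl get svc -A` output shown above and builds the URL. It assumes the default column layout. The function names are hypothetical.

A more robust alternative is to ask kubectl directly: `kubectl -n kubernetes-dashboard get svc kubernetes-dashboard -o jsonpath='{.spec.ports[0].nodePort}'`.

```bash
#!/usr/bin/env bash
# Sketch: extract the dashboard NodePort from `kubectl get svc -A` output and
# build the login URL. Columns assumed: NAMESPACE NAME TYPE CLUSTER-IP
# EXTERNAL-IP PORT(S) AGE.

# Reads svc lines on stdin, prints the nodePort of the kubernetes-dashboard service.
dashboard_nodeport() {
  awk '$2 == "kubernetes-dashboard" {
    split($6, p, "[:/]")   # "443:31019/TCP" -> p[1]=443, p[2]=31019, p[3]=TCP
    print p[2]
  }'
}

# The dashboard serves HTTPS (port 443 -> targetPort 8443), hence https://.
dashboard_url() {
  printf 'https://%s:%s/\n' "$1" "$2"
}
```

Usage: `dashboard_url 192.168.149.128 "$(kubectl get svc -A | dashboard_nodeport)"`.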

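6.3 Accessing the dashboard

Open https://<IP of any node in the k8s cluster>:<port> in a browser, for example https://192.168.149.128:31019.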

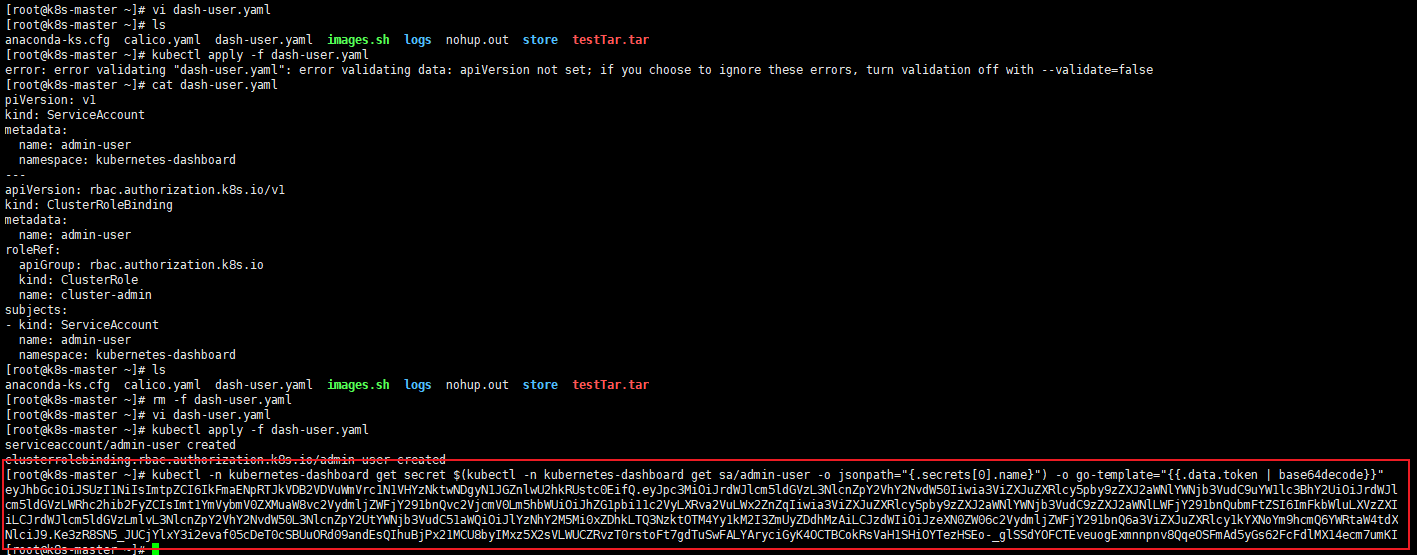

6.4 Creating an access account

Actually logging in on the page from 6.3 requires a token, so first create an account with the following steps.

Create the access account by preparing a YAML file (vi dash.yaml) with this content:

    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: admin-user
      namespace: kubernetes-dashboard
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      name: admin-user
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: cluster-admin
    subjects:
    - kind: ServiceAccount
      name: admin-user
      namespace: kubernetes-dashboard

Then apply it:

    kubectl apply -f dash.yaml

6.4 Getting the access token

    # Get the access token
    kubectl -n kubernetes-dashboard get secret $(kubectl -n kubernetes-dashboard get sa/admin-user -o jsonpath="{.secrets[0].name}") -o go-template="{{.data.token | base64decode}}"

Token content:

    eyJhbGciOiJSUzI1NiIsImtpZCI6IkFmaENpRTJkVDB2VDVuWmVrc1N1VHYzNktwNDgyN1JGZnlwU2hkRUstc0EifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJhZG1pbi11c2VyLXRva2VuLWx2ZnZqIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6ImFkbWluLXVzZXIiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiJlYzNhY2M5Mi0xZDhkLTQ3NzktOTM4Yy1kM2I3ZmUyZDdhMzAiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZXJuZXRlcy1kYXNoYm9hcmQ6YWRtaW4tdXNlciJ9.Ke3zR8SN5_JUCjYlxY3i2evaf05cDeT0cSBUuORd09andEsQIhuBjPx21MCU8byIMxz5X2sVLWUCZRvzT0rstoFt7gdTuSwFALYAryciGyK4OCTBCokRsVaH1SHiOYTezHSEo-_glSSdYOFCTEveuogExmnnpnv8QqeOSFmAd5yGs62FcFdlMX14ecm7umKIW2-se9E2PLLP3tDYCZxzM4ouGMFxf-uf1b_ruEg6K01En2xXP-QYA8YOhV7ogL3aUv7EKceiNw-tcpAcL_bX0D3zCgbZMYkB2sCjQ9UMpjD-VYbxPcapwsFmR4AchSrmK_qP5e5KdUx8D0G3nhXm5A

Token extraction process
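The token above is a JWT: three base64url-encoded parts separated by dots (header.payload.signature). To check which account a token belongs to before pasting it into the login page, you can decode the middle part locally. This only reads the claims; it does not verify the signature.

The sketch below assumes a `base64` that accepts `-d` (GNU coreutils or recent macOS). The function name is hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: print the decoded payload (middle part) of a JWT such as a
# service-account token. No key needed; the signature is NOT verified.

jwt_payload() {
  local p
  # Take the second dot-separated part and map base64url back to base64.
  p="$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')"
  # base64url drops the "=" padding; restore it so base64 -d accepts the input.
  case $(( ${#p} % 4 )) in
    2) p="${p}==" ;;
    3) p="${p}=" ;;
  esac
  printf '%s' "$p" | base64 -d
}
```

Usage: `jwt_payload "$TOKEN"`. For the admin-user token above, the `sub` field should read `system:serviceaccount:kubernetes-dashboard:admin-user`.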

6.5 Entering the token in the UI and logging in

7. Errors encountered along the way

Note:

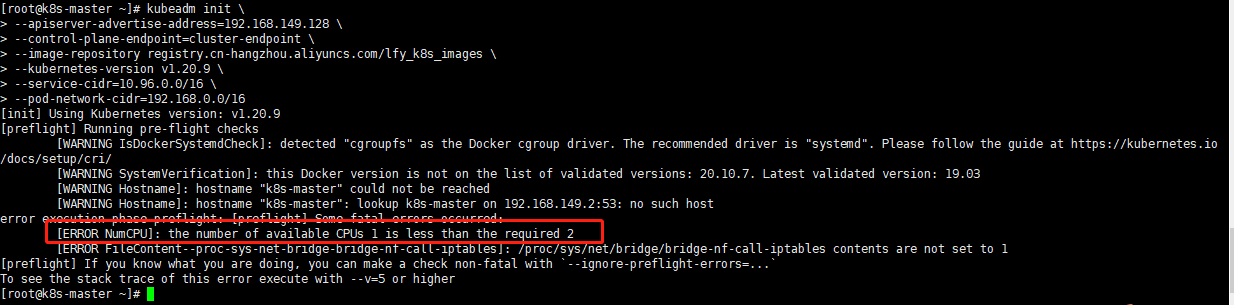

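If kubeadm init fails or is forcibly interrupted, run kubeadm reset before trying again.

7.1 Fewer than the required 2 CPU cores (memory must also be at least 2 GB)

Fix: since these are virtual machines, simply increase the memory and the number of CPU cores in the VM settings.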

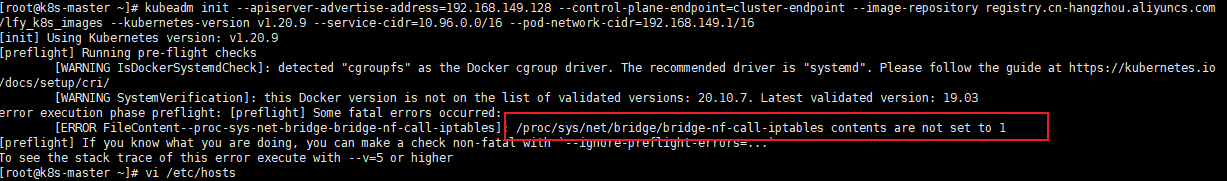
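To check a VM before running kubeadm init, compare its CPU count and MemTotal with the minimums. A VM configured with "2 GB" reports somewhat less than 2048 MB in /proc/meminfo. As far as I know, kubeadm's own preflight memory check uses a threshold of about 1700 MB, so the sketch below uses that as an adjustable default rather than a hard 2048. The function names are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: compare CPU count and memory with the minimums from 7.1.
# The memory threshold (MB) is a parameter; 1700 is an assumed default.

# Succeeds if cpus >= 2 and mem_mb >= min_mb.
meets_min_requirements() {
  local cpus="$1" mem_mb="$2" min_mb="${3:-1700}"
  (( cpus >= 2 && mem_mb >= min_mb ))
}

# Prints "<cpus> <mem_mb>" for this Linux machine (nproc and MemTotal in kB).
current_resources() {
  local mem_kb
  mem_kb="$(awk '/^MemTotal:/ {print $2}' /proc/meminfo)"
  echo "$(nproc) $(( mem_kb / 1024 ))"
}
```

Usage: `meets_min_requirements $(current_resources) || echo "give this VM more CPUs or memory"`.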

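7.2 Setting bridge-nf-call-iptables

    # Error reported by the kubeadm init preflight checks:
    # [ERROR FileContent--proc-sys-net-bridge-bridge-nf-call-iptables]: /proc/sys/net/bridge/bridge-nf-call-iptables contents are not set to 1
    # Fix: set the value to 1
    echo "1" >/proc/sys/net/bridge/bridge-nf-call-iptables

This takes effect immediately but is lost on reboot. The /etc/sysctl.d/k8s.conf file from section 3 (loaded with sudo sysctl --system) is what makes the setting persistent.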

7.3 Calico version incompatible with the Kubernetes version

Error message:

    [root@k8s-master ~]$ curl https://docs.projectcalico.org/manifests/calico.yaml -O
    [root@k8s-master ~]$ kubectl apply -f calico.yaml
    configmap/calico-config created
    customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org created
    customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org created
    customresourcedefinition.apiextensions.k8s.io/blockaffinities.crd.projectcalico.org created
    customresourcedefinition.apiextensions.k8s.io/caliconodestatuses.crd.projectcalico.org created
    customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org created
    customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org created
    customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org created
    customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org created
    customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org created
    customresourcedefinition.apiextensions.k8s.io/ipamblocks.crd.projectcalico.org created
    customresourcedefinition.apiextensions.k8s.io/ipamconfigs.crd.projectcalico.org created
    customresourcedefinition.apiextensions.k8s.io/ipamhandles.crd.projectcalico.org created
    customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org created
    customresourcedefinition.apiextensions.k8s.io/ipreservations.crd.projectcalico.org created
    customresourcedefinition.apiextensions.k8s.io/kubecontrollersconfigurations.crd.projectcalico.org created
    customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org created
    customresourcedefinition.apiextensions.k8s.io/networksets.crd.projectcalico.org created
    clusterrole.rbac.authorization.k8s.io/calico-kube-controllers created
    clusterrolebinding.rbac.authorization.k8s.io/calico-kube-controllers created
    clusterrole.rbac.authorization.k8s.io/calico-node created
    clusterrolebinding.rbac.authorization.k8s.io/calico-node created
    daemonset.apps/calico-node created
    serviceaccount/calico-node created
    deployment.apps/calico-kube-controllers created
    serviceaccount/calico-kube-controllers created
    error: unable to recognize "calico.yaml": no matches for kind "PodDisruptionBudget" in version "policy/v1"

Cause: the Calico version is incompatible with the Kubernetes version. The latest manifest defines a PodDisruptionBudget with apiVersion policy/v1, which a v1.20 API server does not serve. PodDisruptionBudget only became available in policy/v1 with Kubernetes 1.21.

https://projectcalico.docs.tigera.io/archive/v3.20/getting-started/kubernetes/requirements
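The failing object is a PodDisruptionBudget in `policy/v1`, which the API server serves from Kubernetes 1.21 on. On a live cluster, `kubectl api-versions | grep '^policy/v1$'` answers the question directly. The sketch below decides the same thing from a version string such as the `GitVersion` printed by `kubectl version`. The function name is hypothetical, and it only handles plain `vMAJOR.MINOR.PATCH` strings.

```bash
#!/usr/bin/env bash
# Sketch: does a Kubernetes version serve PodDisruptionBudget in policy/v1?
# (policy/v1 PDB is available from 1.21; 1.20 only has policy/v1beta1.)

supports_pdb_policy_v1() {
  local v="${1#v}" major minor patch
  IFS=. read -r major minor patch <<< "$v"
  (( major > 1 || (major == 1 && minor >= 21) ))
}
```

Usage: `supports_pdb_policy_v1 v1.20.9 || echo "use a Calico manifest that matches this Kubernetes version"`.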

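Solution: check the Kubernetes version, then download the Calico manifest for a matching version (v3.20 here):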

    [root@k8s-master ~]$ kubectl version
    Client Version: version.Info{Major:"1", Minor:"20", GitVersion:"v1.20.9", GitCommit:"7a576bc3935a6b555e33346fd73ad77c925e9e4a", GitTreeState:"clean", BuildDate:"2021-07-15T21:01:38Z", GoVersion:"go1.15.14", Compiler:"gc", Platform:"linux/amd64"}
    Server Version: version.Info{Major:"1", Minor:"20", GitVersion:"v1.20.9", GitCommit:"7a576bc3935a6b555e33346fd73ad77c925e9e4a", GitTreeState:"clean", BuildDate:"2021-07-15T20:56:38Z", GoVersion:"go1.15.14", Compiler:"gc", Platform:"linux/amd64"}

    curl https://docs.projectcalico.org/v3.20/manifests/calico.yaml -O

7.4 k8s config not updated after changing the Calico configuration

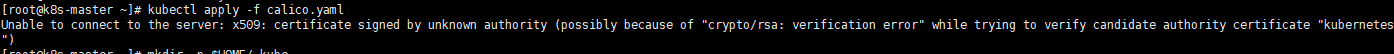

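Error message:

Unable to connect to the server: x509: certificate signed by unknown authority (possibly because of "crypto/rsa: verification error" while trying to verify candidate authority certificate "kubernetes")

Cause:

The configuration was not updated: the old config is still in place. $HOME/.kube/config was copied after an earlier kubeadm init, so after kubeadm reset and a new init its CA certificate no longer matches the cluster's new one.

Solution: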

    mkdir -p $HOME/.kube
    # cp -i asks before overwriting the old file: answer y
    sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
    sudo chown $(id -u):$(id -g) $HOME/.kube/config

    # Then run the apply again
    kubectl apply -f calico.yaml
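A quick way to spot this situation after any kubeadm reset and re-init is to compare the user's copy with the freshly generated admin.conf. If they differ, the copy is stale and the three commands above refresh it. The sketch below is a hypothetical helper built on `cmp`. The default paths are the ones used in this article.

```bash
#!/usr/bin/env bash
# Sketch: succeeds if ~/.kube/config exists but differs from the admin.conf
# written by the latest kubeadm init, i.e. it is probably stale.

kubeconfig_is_stale() {
  local src="${1:-/etc/kubernetes/admin.conf}" dst="${2:-$HOME/.kube/config}"
  [[ -f "$dst" ]] && ! cmp -s "$src" "$dst"
}
```

Usage (as root, or with sudo for reading admin.conf): `kubeconfig_is_stale && echo "re-copy admin.conf to ~/.kube/config"`.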